NVIDIA has released Nemotron Nano 2, a new family of enterprise-ready AI models designed to run up to 6× faster than similarly sized rivals while handling 128K-token prompts on a single midrange GPU.

The release could shift how enterprises and researchers deploy advanced reasoning, coding, and multilingual AI — all without costly hardware setups.

Key Takeaways

- Nemotron Nano 2 runs up to 6× faster than similarly sized AI models.

- Delivers 128K context on a single NVIDIA A10G GPU.

- Outperforms peers in reasoning, coding, and math benchmarks.

- NVIDIA open-sources training data and recipes for reproducibility.

- Aimed at lowering costs and scaling enterprise AI deployment.

NVIDIA’s Nemotron Nano 2 is a new family of hybrid Mamba-Transformer AI models, offering up to 6× faster inference than competitors. It supports 128K context windows on a single midrange GPU and ships with open training data, making advanced reasoning and enterprise-scale AI more accessible and cost-efficient.

NVIDIA Pushes AI Forward with Nemotron Nano 2

NVIDIA has unveiled Nemotron Nano 2, a production-ready family of large language models (LLMs) that promises up to 6.3× higher throughput than rivals of similar size. Beyond speed, the models support a 128K-token context on a single NVIDIA A10G GPU (22 GiB), a significant gain for enterprises that rely on long-context reasoning and coding tasks.

“This release represents a new chapter in AI efficiency — both in throughput and transparency,” said Bryan Catanzaro, VP of Applied Deep Learning Research at NVIDIA (NVIDIA, 2025).

Hybrid Design: Mamba Meets Transformer

At the heart of Nemotron Nano 2 is a hybrid Mamba-Transformer architecture. About 92% of the layers use Mamba-2 state-space models, with only a small fraction using self-attention. Because a Mamba-2 layer keeps a fixed-size state rather than a key-value cache that grows with every token, this mix lets the models sustain long-sequence reasoning while generating tokens quickly.
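To see why the share of attention layers matters at 128K tokens, here is a rough back-of-the-envelope sketch of key-value cache size. The layer count, number of KV heads, and head dimension are hypothetical values chosen for illustration, not the published Nemotron Nano 2 configuration, and the hybrid figure leaves out the Mamba-2 state, which does not grow with sequence length.

```python
def kv_cache_bytes(attn_layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Key/value cache size for one sequence.

    Every attention layer stores one key and one value vector (the factor 2)
    per KV head for each token. bytes_per_value=2 corresponds to 16-bit values.
    """
    return 2 * attn_layers * kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical model shape, for illustration only.
TOTAL_LAYERS = 50
KV_HEADS = 8
HEAD_DIM = 128
SEQ_LEN = 128 * 1024  # a 128K-token prompt

# Pure Transformer: every layer keeps a KV cache.
dense = kv_cache_bytes(TOTAL_LAYERS, KV_HEADS, HEAD_DIM, SEQ_LEN)

# Hybrid: roughly 8% of the layers use attention, matching the
# "about 92% Mamba-2" split described in the article.
hybrid_attn_layers = TOTAL_LAYERS * 8 // 100
hybrid = kv_cache_bytes(hybrid_attn_layers, KV_HEADS, HEAD_DIM, SEQ_LEN)

GIB = 1024 ** 3
print(f"dense KV cache:  {dense / GIB:.1f} GiB")
print(f"hybrid KV cache: {hybrid / GIB:.1f} GiB")
```

Under these assumed shapes, the all-attention variant needs 25.0 GiB for the cache alone, more than the 22 GiB available on an A10G, while the hybrid needs 2.0 GiB. The real numbers depend on the actual model configuration.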

Transparency in Training: Data Open-Sourced

Unlike many competitors, NVIDIA is openly publishing much of its training data and recipes. The dataset spans math (133B tokens), multilingual web content, STEM domains, and curated GitHub code. This move is seen as a push to raise the bar for reproducibility in AI research.

“Transparency is no longer optional in AI development — it’s a trust factor,” noted Nathan Benaich, founder of Air Street Capital (Air Street Capital, 2024).

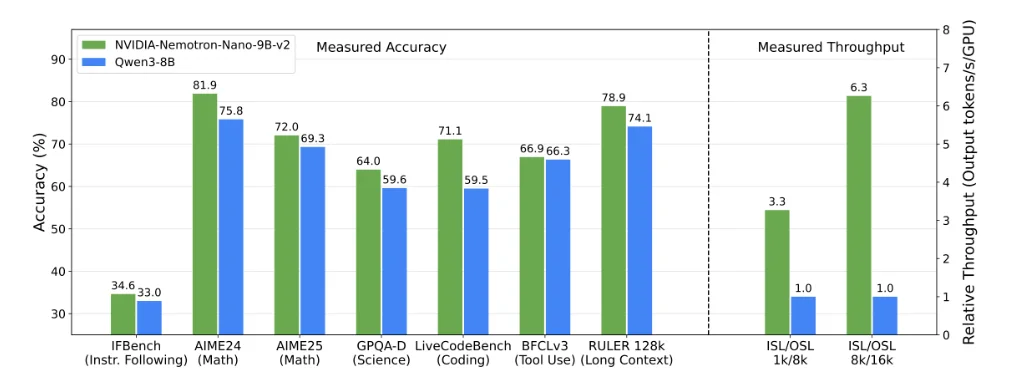

Benchmarks: Outpacing Rivals

On the math, coding, and long-context benchmarks below, Nemotron Nano 2 scores higher than its peers:

| Benchmark | Nemotron Nano 2 (9B) | Qwen3-8B | Gemma3-12B |
| --- | --- | --- | --- |
| GSM8K (Math, CoT) | 91.4 | 84.0 | 74.5 |
| MATH Dataset | 80.5 | 55.4 | 42.4 |
| HumanEval+ (Coding) | 58.5 | 57.6 | 36.7 |
| RULER-128K (Long Context) | 82.2 | – | 80.7 |

Nemotron Nano 2 is not just fast: it leads both peers on every benchmark listed, by wide margins in math and narrowly over Qwen3-8B in coding, all while running efficiently on accessible hardware.
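The size of each lead can be read off the table with a few lines of Python. This is a small sketch that uses only the scores reported above; the names `SCORES`, `BASELINE`, and `margins` are illustrative, and `None` stands for the one score the table does not report.

```python
# Scores copied from the benchmark table; None marks an unreported score.
SCORES = {
    "GSM8K (Math, CoT)": {
        "Nemotron Nano 2 (9B)": 91.4, "Qwen3-8B": 84.0, "Gemma3-12B": 74.5},
    "MATH Dataset": {
        "Nemotron Nano 2 (9B)": 80.5, "Qwen3-8B": 55.4, "Gemma3-12B": 42.4},
    "HumanEval+ (Coding)": {
        "Nemotron Nano 2 (9B)": 58.5, "Qwen3-8B": 57.6, "Gemma3-12B": 36.7},
    "RULER-128K (Long Context)": {
        "Nemotron Nano 2 (9B)": 82.2, "Qwen3-8B": None, "Gemma3-12B": 80.7},
}
BASELINE = "Nemotron Nano 2 (9B)"


def margins(scores, baseline=BASELINE):
    """Return the baseline's lead, in points, over every other model."""
    result = {}
    for bench, row in scores.items():
        ours = row[baseline]
        result[bench] = {
            model: None if score is None else round(ours - score, 1)
            for model, score in row.items()
            if model != baseline
        }
    return result


MARGINS = margins(SCORES)
for bench, leads in MARGINS.items():
    parts = [
        f"{model}: {'n/a' if lead is None else format(lead, '+.1f')}"
        for model, lead in leads.items()
    ]
    print(f"{bench:<28}" + "  ".join(parts))
```

The widest gap is on MATH (+25.1 points over Qwen3-8B, +38.1 over Gemma3-12B); the narrowest is on HumanEval+ against Qwen3-8B (+0.9).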

Why It Matters

Nemotron Nano 2 is designed to run efficiently on affordable hardware. By keeping the model compact (9B parameters) yet powerful, NVIDIA is enabling startups, universities, and enterprises with modest GPU infrastructure to deploy advanced AI at scale.

In practical terms, it lowers costs while widening access to AI-driven education, coding assistants, multilingual chatbots, and enterprise reasoning systems.

Numbers to Watch

- 6.3× faster throughput vs. Qwen3-8B.

- 128K context length on a single A10G GPU.

- 133B math tokens in the open dataset.

What’s Next

- Wider adoption in enterprise workflows requiring long-context reasoning.

- Potential integration into AI coding copilots and STEM tutoring platforms.

- Further hybrid architecture innovations blending Mamba-2 and transformers.

- Acceleration of the open-source AI ecosystem through shared training recipes.

Conclusion

NVIDIA’s Nemotron Nano 2 release is more than just another model drop; it is a blueprint for cost-effective AI at scale. By combining speed, transparency, and accessibility, it positions NVIDIA to shape the future of enterprise-ready AI.

Tomorrow’s question: Will other AI labs follow NVIDIA’s lead on open data, or stick to closed walls?