When Google talks about “privacy,” the world tends to listen with one raised eyebrow. But this time, the tech giant might have built something that actually earns some trust.

This week, Google unveiled Private AI Compute, a new way to run its most powerful Gemini AI models in the cloud without, the company says, exposing your personal data to anyone, not even Google itself. The platform promises on-device-level privacy with cloud-scale performance, and if it delivers, it could change how cloud AI operates.

The end of the on-device vs. cloud trade-off

For years, AI assistants have lived in a tug-of-war: process data locally to protect privacy, or send it to the cloud to access bigger brains. Google’s answer is simple — why not both?

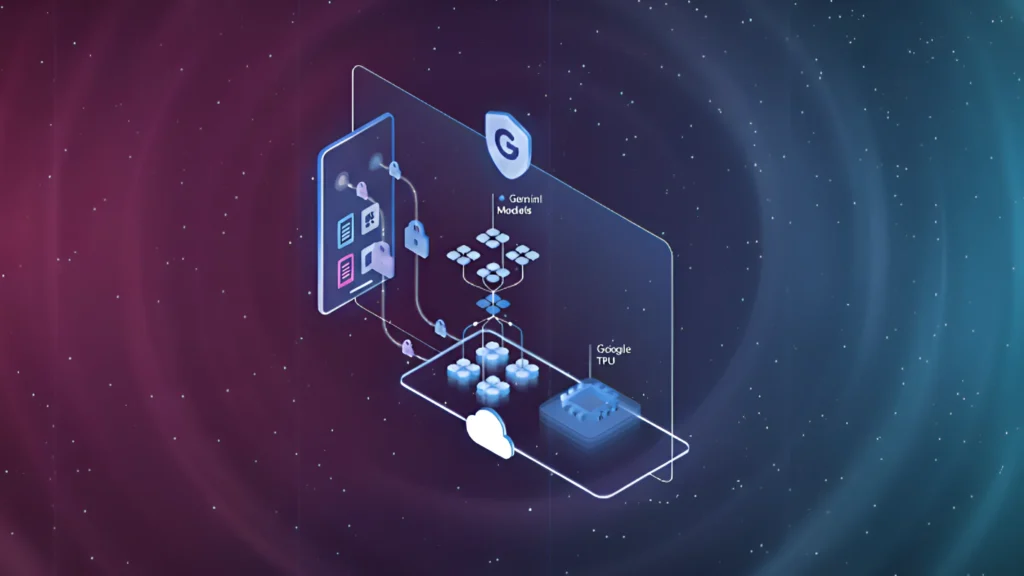

Private AI Compute acts as a sealed vault in the cloud. Your data gets encrypted, processed inside what Google calls a hardware-secured “enclave”, and then erased. By Google’s account, even its own engineers can’t peek in. The design blends confidential computing, trusted execution environments, and encryption at every hop, and it runs on Google’s custom Tensor Processing Units (TPUs) and Titanium Intelligence Enclaves (TIE).

According to Jay Yagnik, Google’s VP of AI Innovation and Research, the system uses “remote attestation,” a sort of digital handshake that lets your device verify it is talking only to an authentic, unmodified server inside that secure environment. “Sensitive data processed by Private AI Compute remains accessible only to you,” he said.
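
To make that handshake less abstract, here is a toy sketch of the idea in Python. It is not Google’s protocol: every name in it (`HARDWARE_KEY`, `make_report`, `verify_report`) is made up for illustration, and a shared-secret HMAC stands in for the hardware-rooted asymmetric signatures that real attestation relies on. The server reports a hash of the software it is running, bound to a one-time nonce from the client, and the client accepts the report only if it is authentic, fresh, and matches a known-good build.

```python
import hashlib
import hmac
import secrets

# Hypothetical stand-in for a hardware root of trust. Real attestation uses
# asymmetric signatures chained to a vendor certificate, not a shared key.
HARDWARE_KEY = secrets.token_bytes(32)


def measure(code: bytes) -> str:
    """Hash of the server software image (its 'measurement')."""
    return hashlib.sha256(code).hexdigest()


def _tag(measurement: str, nonce: bytes) -> str:
    """Authenticate a measurement together with the client's nonce."""
    msg = measurement.encode() + nonce
    return hmac.new(HARDWARE_KEY, msg, hashlib.sha256).hexdigest()


def make_report(code: bytes, nonce: bytes) -> dict:
    """Server side: report what is running, bound to the client's nonce."""
    measurement = measure(code)
    return {"measurement": measurement, "tag": _tag(measurement, nonce)}


def verify_report(report: dict, nonce: bytes, trusted: set[str]) -> bool:
    """Client side: accept only a fresh, authentic report of known-good code."""
    expected = _tag(report["measurement"], nonce)
    return (
        hmac.compare_digest(expected, report["tag"])
        and report["measurement"] in trusted
    )


official = b"official server build"
trusted = {measure(official)}          # builds the client is willing to talk to
nonce = secrets.token_bytes(16)        # fresh per request, prevents replays

print(verify_report(make_report(official, nonce), nonce, trusted))           # True
print(verify_report(make_report(b"tampered build", nonce), nonce, trusted))  # False
```

The point of the nonce is that an old, previously valid report cannot be replayed: a report generated for a different nonce fails the check even if the software measurement is correct.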

Pixel 10 gets first dibs

The rollout begins quietly, tucked into the Pixel 10 lineup. Magic Cue will surface more timely, contextual suggestions, and the Recorder app can summarize transcripts in more languages, both powered by Private AI Compute.

That may sound minor, but it’s a big architectural shift. Instead of cramming everything on-device, your phone now leans on a Gemini cloud model, securely, to reason and summarize at a depth its own chip can’t match.

In short: your phone gets smarter, but your data stays yours.

How Google keeps itself out of your business

Google says Private AI Compute follows its Secure AI Framework (SAIF), a published set of guidelines for building secure AI systems, along with its AI and privacy principles.

Under the hood, the tech uses a few clever mechanisms to wall off data:

- Ephemeral sessions: Once a task finishes, the inputs and the computation are discarded.

- Binary authorization: Only verified, signed code can run.

- Zero shell access: Even administrators can’t poke inside running workloads.

- Memory encryption + IOMMU isolation: Data in RAM stays encrypted and hardware devices are fenced off from it, which guards against leaks through physical access or direct memory access.
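
Two of those mechanisms, binary authorization and ephemeral sessions, are easy to picture in code. The Python sketch below is an illustration under loud assumptions, not Google’s implementation: `APPROVED_DIGESTS`, `ephemeral_session`, and `run` are hypothetical names, a hash allowlist stands in for real code signing, and zeroing a Python buffer does not guarantee that no other copy of the data exists in memory.

```python
import hashlib
from contextlib import contextmanager

# Hypothetical approved workload. Real binary authorization checks
# cryptographic signatures; a digest allowlist is a simplified stand-in.
APPROVED_BINARY = b"summarize-v1"
APPROVED_DIGESTS = {hashlib.sha256(APPROVED_BINARY).hexdigest()}


def is_authorized(binary: bytes) -> bool:
    """Binary authorization: only allowlisted code may run."""
    return hashlib.sha256(binary).hexdigest() in APPROVED_DIGESTS


@contextmanager
def ephemeral_session(user_input: bytes):
    """Ephemeral session: the input buffer is wiped when the task ends."""
    buf = bytearray(user_input)
    try:
        yield buf
    finally:
        # Overwrite in place, even if the task raised an exception.
        for i in range(len(buf)):
            buf[i] = 0


def run(binary: bytes, user_input: bytes) -> int:
    """Run a toy 'workload' (a word count) under both rules."""
    if not is_authorized(binary):
        raise PermissionError("unapproved binary")
    with ephemeral_session(user_input) as buf:
        return len(buf.split())


print(run(APPROVED_BINARY, b"meeting notes to summarize"))  # 4

try:
    run(b"unsigned-build", b"meeting notes to summarize")
except PermissionError as err:
    print("blocked:", err)
```

Only the result (here, a word count) leaves the session; the input itself is overwritten as soon as the work is done, whether the task succeeded or failed.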

An independent review by cybersecurity firm NCC Group reportedly found minor timing-based vulnerabilities but rated the system “low risk,” citing Google’s extensive noise and attestation layers that make correlation attacks extremely difficult.

Still, the fact that Google invited an external audit at all is notable — and somewhat rare in Big Tech’s privacy playbook.

Why this matters

Google’s announcement doesn’t live in a vacuum. Both Apple and Meta have recently rolled out similar privacy-preserving cloud infrastructures: Private Cloud Compute and Private Processing, respectively. Google’s pitch is that its Gemini foundation models run directly inside this sealed environment, which means far more capability than what lives on your phone’s chip.

If the system scales, it could reshape how privacy works in the AI era, especially as regulators tighten their grip. Europe’s GDPR and its AI Act, whose obligations are now phasing in, push companies to show how personal data is protected, and Google’s move looks designed to get ahead of that pressure.

In a sense, this could be the company’s bid to prove that “helpful AI” doesn’t have to mean “surveillance AI.”

The bigger picture

The industry is heading toward what some call hybrid AI — the middle ground between device and cloud, where compute power meets confidentiality.

Google’s Private AI Compute might be one of the first major proofs of concept at scale. If successful, it could give developers and enterprises a way to run advanced AI workloads without ever surrendering sensitive data to the cloud provider.

That’s a radical shift for Google, a company often criticized for its data-driven business model.

And if users actually start trusting Google’s AI because of it? That might be the most transformative upgrade of all.

Conclusion

Google’s Private AI Compute could mark a turning point in the privacy-tech arms race.

It blends Gemini-level intelligence with encryption at every hop and hardware-sealed enclaves, hinting at a future where cloud AI doesn’t automatically mean compromised privacy.

The question now is simple — will users believe Google finally got privacy right?