Every year, Google launches products.

But 2025 was different.

This wasn’t about incremental upgrades or flashy demos. It was about foundations. Google spent 2025 rebuilding how AI works across search, Android, creativity, education, hardware, and science.

By December, it became clear:

Google is no longer adding AI to products. The products are AI.

This article is a human-written, month-by-month breakdown of Google’s major 2025 launches, from January through December. No hype. No fluff. Just clarity, context, and impact.

Why Google’s 2025 Launches Matter More Than They Seem

- Google shifted AI from experiments to infrastructure

- Most updates were quiet but permanent

- These launches shape how billions use technology daily

- This is Google positioning itself for the next decade, not the next headline

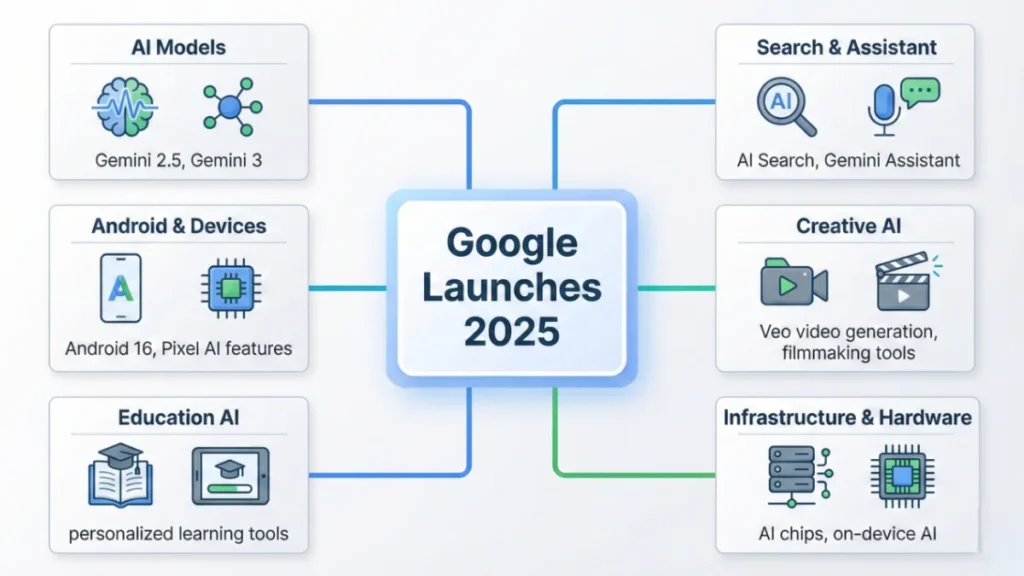

Google’s 2025 AI Strategy in One Minute

- Gemini became Google’s core intelligence layer

- Search evolved into a research assistant

- Android integrated AI at the OS level

- Creative tools matured into production-ready systems

- Hardware was redesigned around inference

Quick Snapshot: Google Launches in 2025

- AI Models: Gemini 2.5, Gemini 3, Veo 3, DolphinGemma

- Hardware: Pixel 10, Ironwood TPU, Gemini-powered Home devices

- Software: Android 16, AI Mode in Search, Gemini CLI

- Education: New Google AI Education Tools

- Creative Tools: Flow, Veo filmmaking suite

- Search & Travel: Flight Deals, Search Live

Now let’s explore them month by month.

January–March 2025: Google Sets the Tone for the AI Year

Gemini 2.5 – Google’s Most Intelligent AI Model

In March, Google introduced Gemini 2.5, positioning it as its most capable and intelligent AI model to date.

This release mattered because it wasn’t built for demos.

It was built for reasoning.

What Gemini 2.5 brought to the table:

- Stronger multi-step logical reasoning

- Improved mathematical accuracy

- Longer context windows

- Reduced hallucinations

- Better alignment with human intent

Gemini 2.5 became the core brain behind many Google systems in 2025, especially Search, research tools, and enterprise AI.

This was Google signaling confidence. Not noise.

Gemma 3 – Powerful AI for Developers Without Massive Infrastructure

While Gemini 2.5 targeted scale, Gemma 3 focused on accessibility.

Gemma 3 is optimized to run efficiently on:

- A single GPU

- A single TPU

- Limited infrastructure environments

Why Gemma 3 mattered:

- It empowered startups and indie developers

- It reduced dependency on large cloud budgets

- It expanded Google’s open AI ecosystem

In simple terms, Google said: You don’t need a data center to build meaningful AI.
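To see why a single accelerator can be enough, here is a rough back-of-the-envelope sketch. It assumes the nominal Gemma 3 sizes (1B, 4B, 12B, 27B) and estimates weight memory only, as parameters times bytes per parameter. The precision table and the 24 GB card are illustrative assumptions, and real usage is higher once the KV cache and activations are counted.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Ignores KV cache, activations, and runtime overhead, so real usage is higher.

# Nominal model sizes in billions of parameters (exact counts differ slightly).
GEMMA3_SIZES_B = {"1B": 1, "4B": 4, "12B": 12, "27B": 27}

# Bytes needed to store one parameter at each precision (illustrative choices).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Return approximate weight memory in GB (1 GB = 1e9 bytes)."""
    return params_billion * 1e9 * BYTES_PER_PARAM[precision] / 1e9


def fits(params_billion: float, precision: str, gpu_memory_gb: float) -> bool:
    """True if the weights alone fit in the given accelerator memory."""
    return weight_memory_gb(params_billion, precision) <= gpu_memory_gb


if __name__ == "__main__":
    for name, size in GEMMA3_SIZES_B.items():
        row = ", ".join(
            f"{p}: {weight_memory_gb(size, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"Gemma 3 {name} -> {row}")
```

Under these assumptions, the largest model needs about 54 GB of weights at bf16 but about 13.5 GB at 4-bit, which is the difference between needing a server-class accelerator and fitting on a hypothetical 24 GB card.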

AI Mode in Google Search – A Quiet Revolution

One of the most impactful launches of the year didn’t come with a flashy event.

AI Mode fundamentally changed how people search.

Instead of only links, users now see:

- AI-generated summaries

- Contextual answers

- Follow-up exploration prompts

- Synthesized information across sources

This marked the transition of Google Search from a directory to an intelligent research assistant.

February–April 2025: Science, Nature & Creativity Collide

New AI System for Scientists

Before consumer tools, Google focused on research.

In February, Google launched a specialized AI system for scientists, designed to support:

- Complex simulations

- Large-scale data analysis

- Hypothesis generation

- Pattern discovery

It is aimed at fields such as:

- Medical research

- Climate modeling

- Physics and chemistry labs

It reinforced Google’s long-standing belief that AI’s biggest impact starts in science.

DolphinGemma – Decoding Dolphin Communication

One of the most fascinating launches of 2025 was DolphinGemma.

This AI model is designed to analyze dolphin vocalizations and behavioral patterns, helping researchers:

- Identify communication structures

- Decode repeated sound patterns

- Understand social signaling

It’s rare for an AI launch to feel poetic.

This one did.

Veo 2 – Google Enters Serious AI Video Generation

In April, Google rolled Veo 2 out more widely, its first serious leap into AI-generated video. The model itself had been announced in December 2024.

Veo 2 introduced:

- Cinematic camera motion

- Scene consistency

- Realistic lighting and depth

- Prompt-driven storytelling

This wasn’t a toy.

It was a foundation for AI filmmaking.

May 2025: Google Rewrites the Rules of Creative AI

Veo 3 & Veo 3.1 – AI Filmmaking Grows Up

In May, Google expanded its video ambitions with Veo 3, followed by Veo 3.1 in October.

These models added:

- Narrative continuity across scenes

- Shot-to-shot consistency

- Film-grade motion logic

For the first time, AI could maintain a story, not just generate clips.

Flow – Google’s AI Filmmaking Tool

Models are powerful. Tools make them usable.

Flow is where creators actually interact with Veo.

Flow allows users to:

- Write scenes in natural language

- Generate full sequences

- Edit with AI assistance

- Maintain creative control

This democratized filmmaking in a way traditional tools never did.

Google AI Ultra – A Premium AI Subscription

Advanced users gained access to Google AI Ultra.

This plan bundles:

- Highest-tier Gemini access

- Early experimental features

- Priority compute resources

It’s aimed at professionals who live inside AI tools daily.

June–August 2025: Android, Search & Real-Time Intelligence

Android 16 – AI at the OS Level

With Android 16, Google deeply embedded AI into the operating system itself.

Key improvements:

- Smarter notifications

- On-device AI processing

- Enhanced privacy controls

- Better battery efficiency through AI optimization

Android stopped feeling reactive.

It became predictive.

Gemini CLI – AI for the Command Line

Developers received Gemini CLI, an open-source AI agent that works directly in the terminal.

It can:

- Write scripts

- Debug code

- Explain system output

- Automate workflows

This made AI feel native to development environments.
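As a minimal sketch of what "native to the terminal" can look like from a script, the snippet below wraps a one-shot CLI call in Python. It assumes the binary is installed as `gemini` and that `-p` passes a non-interactive prompt. Treat both as assumptions and confirm them with `gemini --help`. The helper names `build_command` and `ask_gemini` are made up for illustration.

```python
import shutil
import subprocess
from typing import List, Optional


def build_command(prompt: str, binary: str = "gemini") -> List[str]:
    """Build a one-shot invocation.

    Assumption: the CLI accepts `-p <prompt>` for non-interactive use.
    """
    return [binary, "-p", prompt]


def ask_gemini(prompt: str, timeout_s: int = 120) -> Optional[str]:
    """Run the CLI if it is on PATH; return stdout, or None if it is missing."""
    if shutil.which("gemini") is None:
        return None  # CLI not installed, so there is nothing to run
    result = subprocess.run(
        build_command(prompt),
        capture_output=True,
        text=True,
        timeout=timeout_s,
        check=False,  # leave error handling to the caller
    )
    return result.stdout


if __name__ == "__main__":
    answer = ask_gemini("Explain what `set -euo pipefail` does in a shell script.")
    print(answer if answer is not None else "gemini CLI not found on PATH")
```

Passing the command as a list rather than a shell string avoids quoting problems when the prompt itself contains quotes or backticks.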

Search Live – Real-Time Voice Exploration

Search Live introduced conversational, real-time search.

Users can now:

- Ask follow-up questions verbally

- Explore topics dynamically

- Receive live contextual responses

Search became dialogue.

Deep Think – Slower, Smarter AI Reasoning

Deep Think gave Gemini the ability to pause and reason before answering.

This reduced errors and improved complex problem-solving, especially in:

- Planning tasks

- Technical explanations

- Multi-constraint reasoning

Sometimes, slower is smarter.

Flight Deals – AI-Powered Travel Planning

Flight Deals uses predictive AI to surface optimal booking windows and pricing.

For travelers, this replaced hours of manual searching.

April–October 2025: Hardware Built for AI

Ironwood – Google’s TPU for the Age of Inference

Ironwood is Google’s first TPU designed specifically for inference.

Why that matters:

- Faster real-time responses

- Lower operational cost

- Massive scale efficiency

Inference is where AI lives in production. Ironwood was built for that reality.

Gemini Computer Use Model – AI That Operates Interfaces

In October, Google unveiled a Gemini model capable of controlling computer interfaces.

It can:

- Click buttons

- Fill forms

- Navigate applications

- Perform multi-step workflows

This is foundational for autonomous agents and future AI assistants.
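The general pattern behind this kind of agent is an observe, decide, act loop. The toy sketch below illustrates that loop only. It does not use the Gemini API: `FakeForm`, `Action`, and `scripted_policy` are hypothetical stand-ins, and the "model" is a hard-coded rule where a real system would call a model with a screenshot.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Action:
    kind: str        # "type" or "click" (hypothetical action vocabulary)
    target: str      # hypothetical element id
    text: str = ""   # text to enter, used only by "type"


@dataclass
class FakeForm:
    """Toy stand-in for a real UI: two text fields and a submit button."""
    fields: Dict[str, str] = field(
        default_factory=lambda: {"name": "", "email": ""}
    )
    submitted: bool = False

    def observe(self) -> Dict[str, object]:
        # A real agent would capture a screenshot here instead.
        return {"fields": dict(self.fields), "submitted": self.submitted}

    def apply(self, action: Action) -> None:
        if action.kind == "type" and action.target in self.fields:
            self.fields[action.target] = action.text
        elif action.kind == "click" and action.target == "submit":
            self.submitted = True
        else:
            raise ValueError(f"unsupported action: {action}")


def scripted_policy(state: Dict[str, object], goal: Dict[str, str]) -> Optional[Action]:
    """Stub for the model: fix the first wrong field, then press submit."""
    current = state["fields"]
    for name, wanted in goal.items():
        if current[name] != wanted:
            return Action("type", name, wanted)
    if not state["submitted"]:
        return Action("click", "submit")
    return None  # goal reached, nothing left to do


def run_agent(ui: FakeForm, goal: Dict[str, str], max_steps: int = 10) -> List[Action]:
    """Loop: observe the UI, ask the policy for one action, apply it."""
    history: List[Action] = []
    for _ in range(max_steps):
        action = scripted_policy(ui.observe(), goal)
        if action is None:
            break
        ui.apply(action)
        history.append(action)
    return history


if __name__ == "__main__":
    form = FakeForm()
    steps = run_agent(form, {"name": "Ada", "email": "ada@example.com"})
    for step in steps:
        print(step)
```

The `max_steps` cap matters in practice: an agent that misreads the screen can otherwise loop forever on the same action.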

New Google Home Devices – Built for Gemini

These devices are designed around Gemini, not retrofitted.

They understand:

- Context

- Multi-speaker households

- Complex commands

Smart homes finally became intelligent.

Nano Banana & Nano Banana Pro

Strange name. Serious capability.

Nano Banana is the nickname for Gemini’s image generation and editing model. In 2025 it spread across:

- Google Search

- Google Photos

- NotebookLM

Nano Banana Pro is the higher-end version that followed late in the year.

August–December 2025: The Next Generation Arrives

Pixel 10 – Google’s Most AI-First Phone

Pixel 10 is built around on-device Gemini.

Features include:

- Smarter photography

- Voice-first interactions

- Offline AI processing

Pixel 10 feels less like a phone and more like a companion.

Gemini 3 – The Next Generation of Google AI

Gemini 3 represents a major leap in:

- Reasoning depth

- Emotional alignment

- Adaptability

This is the model Google will build on for years.

Gemini 3 Flash – Frontier Intelligence, Built for Speed

Closing the year, Gemini 3 Flash prioritized speed.

Perfect for:

- Mobile experiences

- Real-time assistants

- High-volume applications

It balanced intelligence with immediacy.

Google Education Tools – AI for Learning in 2025

In 2025, Google launched a new suite of AI education tools for classrooms.

They support:

- Personalized learning paths

- AI-assisted lesson creation

- Real-time feedback

- Accessibility improvements

This could quietly become one of Google’s most impactful launches.

Conclusion: What Google’s 2025 Launches Really Mean

Google didn’t chase trends in 2025.

It defined direction.

AI became calmer.

More useful.

More human.

From Gemini models to Android, Search, education, hardware, and science, Google spent 2025 building the infrastructure of the next decade.

FAQs: Google Launches 2025

What was Google’s biggest launch in 2025?

Gemini 3 stands out as the most significant. It is the next-generation model that Google’s other products will build on.

Did Google release new hardware in 2025?

Yes. Pixel 10, Ironwood TPU, and Gemini-powered Home devices were launched.

Is Gemini replacing Google Assistant?

Gradually, yes. In 2025 Google began moving Assistant users on Android to Gemini, which is deeply integrated into the system and far more capable.

What is Google’s AI video model called?

Veo. The versions covered here are Veo 2, Veo 3, and Veo 3.1.

Are Google’s education AI tools free?

Many features are included in Google’s education ecosystem, with advanced options available.

What makes Android 16 different?

AI is embedded at the operating system level with stronger privacy and on-device intelligence.

What is Gemini CLI used for?

It allows developers to use AI directly in the command line for coding and automation.