X is testing a new idea that feels very on-brand for the platform’s evolving identity: let AI write first, then let the internet argue it into something useful.

On February 5, 2026, Community Notes introduced Collaborative Notes, a feature that allows AI to generate an initial explanatory note on a post—after which human contributors refine, correct, and rate it together in real time.

It’s not just a feature update. It’s a shift in how public context might be produced at scale.

From blank page to crowd-edited context

Until now, Community Notes has relied mainly on human contributors to write explanatory notes from scratch. That system works, but slowly. Writing accurate, neutral context takes time, and misinformation doesn’t wait.

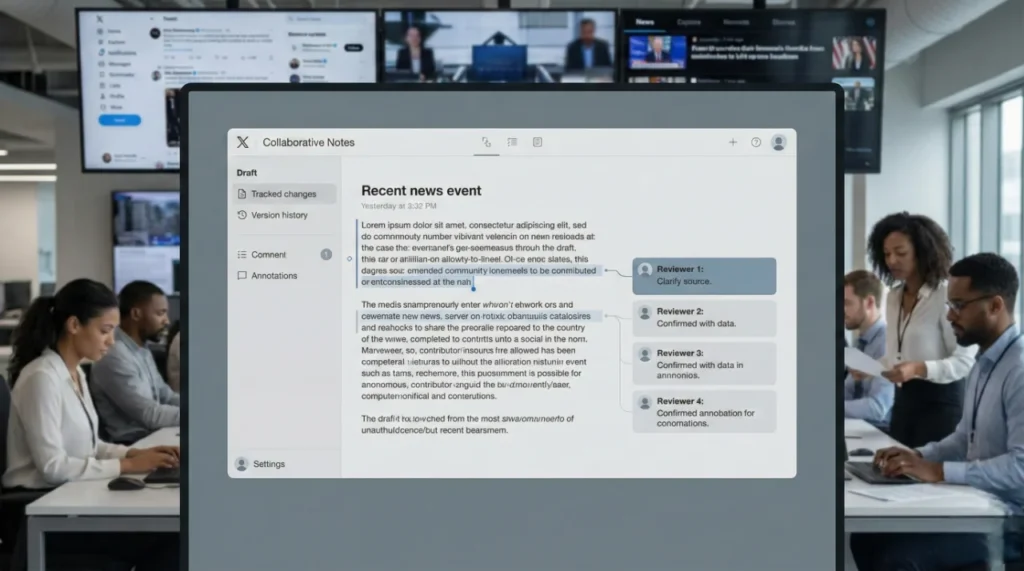

Collaborative Notes flips the workflow. When a contributor requests a note, AI produces a draft based on publicly available information. That draft then enters a collaborative phase where approved contributors can suggest edits, flag issues, and rate its helpfulness.

Nothing gets published automatically. As with traditional Community Notes, a note only goes live if contributors with differing viewpoints broadly agree it adds value.
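To make the publication rule concrete, here is a minimal sketch of a "cross-viewpoint agreement" gate. It is not X's implementation: the production Community Notes scorer is open source and infers viewpoints from rating patterns with a matrix-factorization model, whereas this toy version assumes each rater already carries a coarse viewpoint label. The names `Rating`, `should_publish`, `min_per_group`, and `min_helpful_share` are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rating:
    viewpoint: str  # hypothetical coarse label; the real system infers this from data
    helpful: bool   # True if this rater marked the draft note as helpful


def should_publish(ratings, min_per_group=2, min_helpful_share=0.6):
    """Toy gate: publish only if at least two viewpoint groups each find the note helpful.

    All thresholds are illustrative assumptions, not values used by X.
    """
    votes_by_group = {}
    for rating in ratings:
        votes_by_group.setdefault(rating.viewpoint, []).append(rating.helpful)

    # Ignore groups that have too few ratings to say anything meaningful.
    qualified = {
        group: votes
        for group, votes in votes_by_group.items()
        if len(votes) >= min_per_group
    }

    # Agreement within a single group is not enough; at least two must weigh in.
    if len(qualified) < 2:
        return False

    # Every qualified group must clear the helpfulness threshold.
    return all(
        sum(votes) / len(votes) >= min_helpful_share
        for votes in qualified.values()
    )


if __name__ == "__main__":
    draft_ratings = [
        Rating("A", True), Rating("A", True),
        Rating("B", True), Rating("B", False), Rating("B", True),
    ]
    print(should_publish(draft_ratings))
```

The point of the sketch is the shape of the rule rather than the numbers: a note that only one side rates as helpful stays unpublished, no matter how many ratings it collects from that side.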

X is clear about one thing: AI starts the conversation, but humans still control the outcome.

Why X is betting on AI-assisted fact writing

The logic is straightforward. AI is fast. People are careful.

By combining the two, X hopes to increase the volume of high-quality notes without sacrificing the core principle that made Community Notes credible in the first place: consensus across ideological lines.

In practice, this could mean faster responses to viral claims, breaking news, and misleading posts—areas where Community Notes has sometimes lagged simply because contributors couldn’t keep up.

The move also mirrors how many newsrooms already operate: drafts are cheap, and editing is where the real work happens.

Transparency as a product strategy

Collaborative Notes fits into a broader trend across tech platforms: making moderation more visible rather than more authoritative.

Unlike traditional fact-checking labels, which are often applied behind the scenes, this system is intentionally public. Contributors can watch a note improve—or fall apart—in real time. Ratings are visible. Revisions are traceable.

That openness is part of the pitch. X is positioning Community Notes not as a referee, but as a process.

The risks aren’t hypothetical

There are real concerns. Early AI drafts could subtly frame an issue in ways that influence later edits. Contributors may anchor on the initial wording, even if it’s flawed. And coordinated groups could still attempt to steer outcomes, despite consensus safeguards.

X says Collaborative Notes will roll out gradually, with ongoing evaluation. Details about the specific AI model used for drafting haven’t been disclosed yet.

What this signals for the future of platforms

Collaborative Notes suggests that X sees trust not as something imposed from above but as something negotiated in public, with AI acting as an accelerator, not an authority.

If the experiment works, it could become a template for how large platforms handle context at scale: fast, collaborative, and visibly human-controlled.

If it doesn’t, it will reinforce a harder truth—that even with AI, trust is still the most difficult product to ship.

X isn’t replacing human judgment with AI. It’s testing whether AI can make human judgment faster—and whether the crowd is willing to take the wheel.