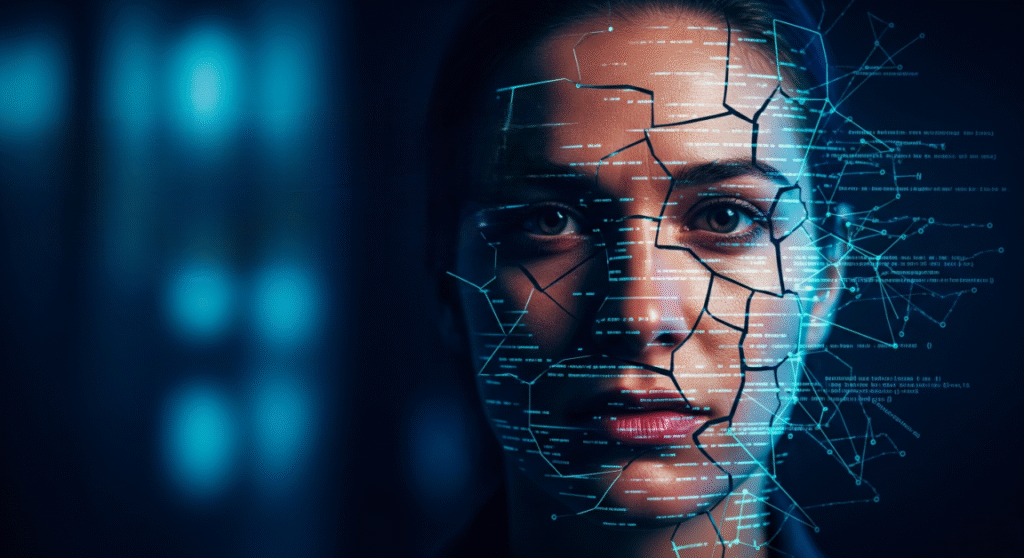

An innocent Indian homemaker had her face stolen and turned into a viral AI-generated influencer—without her knowing. The digital mask, built for revenge, led to millions of views, massive profits, and a disturbing wake-up call about how dangerous AI deepfakes have become.

Key Takeaways:

- A viral Instagram AI influencer, “Babydoll Archi,” was based on a real woman’s face without her knowledge.

- The profile was allegedly created by her ex-boyfriend using AI tools like ChatGPT and Dzine.

- The fake account amassed 1.4 million followers and reportedly earned its creator more than ₹1 million.

- Police have arrested the accused and seized devices, but the harm done is irreversible.

- The case highlights serious gaps in legal protections and digital safety, especially for women.

AI Revenge Porn: Indian Woman’s Face Used in Deepfake Erotic Influencer Profile

In a disturbing turn of events that’s fueling debate around AI misuse and online safety, an Indian woman from Assam found her face at the center of an erotic deepfake scandal without ever setting foot on Instagram.

The woman, known only as Sanchi, had no idea her private photos were being used by her ex-boyfriend to create “Babydoll Archi,” an AI-generated influencer whose seductive dance videos and glam photoshoots racked up 1.4 million followers. At the peak of the account’s virality, Babydoll Archi even appeared in content alongside American adult star Kendra Lust, further fueling speculation and obsession.

But none of it was real.

According to Assam Police, Sanchi’s former partner, Pratim Bora, a mechanical engineer and self-taught AI enthusiast, used tools like ChatGPT and Dzine to fabricate content and build the illusion of a living, breathing online persona. His motive? “Pure revenge,” said senior officer Sizal Agarwal, who’s leading the investigation.

The Shocking Discovery

The truth unraveled only when Sanchi’s family noticed viral photos that looked eerily like her—and lodged a formal complaint with the police. At the time, they had no idea who was behind the account.

Once Instagram provided user details, the trail led directly to Bora, who was arrested on July 12. Police seized his laptop, smartphones, external drives, and even bank records, which revealed he had monetized the account, reportedly earning over ₹1 million from subscriptions and AI content.

“He made ₹3 lakh in just five days before his arrest,” Officer Agarwal noted.

The Cost of Digital Deception

The psychological toll on Sanchi and her family has been immense. “She’s extremely distraught,” said Agarwal. “But they’re now receiving counseling and slowly recovering.”

Sanchi’s case illustrates a dark side of technology where AI deepfakes aren’t just fantasy play—they can weaponize personal images, create false identities, and profit off someone’s likeness without consent.

What’s even more terrifying? Sanchi had no social media presence. She was completely unaware of her digital doppelgänger until it blew up online. Her entire family had been blocked from the account.

Legal Grey Zones and Ethical Dilemmas

The accused, Bora, now faces multiple charges under Indian cybercrime and harassment laws—including sexual harassment, distribution of obscene material, identity forgery, and defamation. If convicted, he could serve up to 10 years in prison.

But the deeper concern is this: Is the law equipped to handle the rise of AI-generated abuse?

Meghna Bal, a lawyer and AI expert, says the law is there but enforcement and awareness lag. “Women have always faced image-based abuse. AI just makes it easier—and often untraceable.”

Victims often don’t even know they’re being impersonated. And even when deepfakes are reported, platforms such as Meta-owned Instagram are slow to act. As of now, the original Babydoll Archi account, which had 282 posts, has been removed, but duplicates still circulate online.

Bal points out that Sanchi could seek the “right to be forgotten” in court—but fully erasing a viral digital presence is nearly impossible.

Why This Matters Now

This case is not just about one woman. It’s a warning flare for the world. As AI tools become easier to use, anyone’s face can be stolen, manipulated, and monetized—without their consent or knowledge.

While tech companies race to innovate, safeguards for ordinary users, especially women, remain dangerously underdeveloped.

Conclusion

Sanchi’s nightmare is a chilling example of what happens when technology outpaces ethics. Unless there are clear legal frameworks, proactive content moderation, and better education around digital identity theft, more lives could be torn apart by invisible crimes, and the victims might never even know.