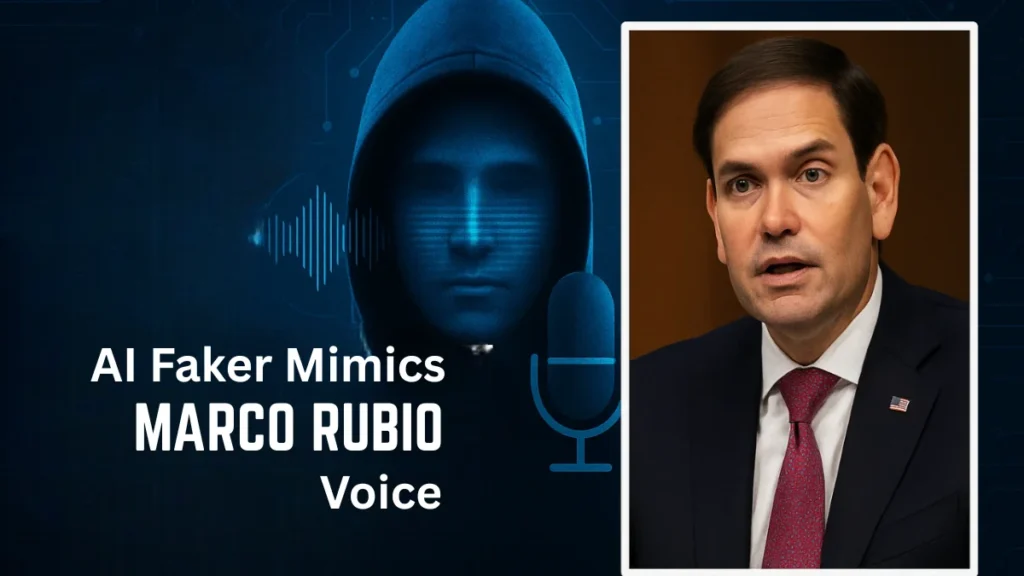

An audacious scammer used AI to mimic Marco Rubio’s voice and writing to target high-level officials. Behind the digital mask: a sinister quest for secrets or access.

In a digital con job blending espionage tactics and cutting-edge tech, an impostor posing as Secretary of State Marco Rubio has reached out to top U.S. and foreign officials, leveraging artificial intelligence to fake Rubio’s voice and writing style.

The operation, which began in mid-June, has rattled Washington and spurred fresh concerns about AI’s growing role in political deception and cybercrime.

A cable obtained by The Washington Post and confirmed by senior U.S. officials reveals the impostor contacted at least five prominent figures, including three foreign ministers, a U.S. governor, and a member of Congress, through voice and text messages.

Key Takeaways:

- An impostor used AI to mimic Marco Rubio’s voice and writing style.

- At least five high-ranking officials were targeted, both U.S. and foreign.

- FBI and State Department are investigating possible espionage motives.

- AI scams are becoming easier—and more convincing—with cheap tools.

- Officials warn against trusting calls or texts, even from familiar names.

Here’s how the plot unfolded

The scammer reportedly created a Signal account labelled “Marco.Rubio@state.gov”, a fake display name rather than a real email, to contact unsuspecting diplomats and politicians. The messages often included voicemails, some inviting targets to chat further on Signal.

“Voicemails are particularly effective because they’re not interactive,” said Hany Farid, a digital forensics expert at the University of California, Berkeley. “With just 15 to 20 seconds of real audio, you can train an AI model to say anything.”

Officials suspect the goal was to trick powerful people into sharing sensitive information or credentials—a chilling new tactic in the age of deepfakes and synthetic media.

The State Department acknowledged the scheme, confirming it’s conducting a thorough investigation. So far, the names of the targeted officials and the specific contents of the fake messages remain under wraps.

Not an Isolated Case

Rubio’s case isn’t unique. May saw an equally dramatic breach when someone hacked the phone of White House Chief of Staff Susie Wiles and impersonated her, contacting senators, governors, and executives. That incident prompted an FBI probe and raised alarms about AI-driven social engineering.

Despite growing risks, many officials still use apps like Signal for sensitive discussions. The appeal? End-to-end encryption and user-friendly design. But experts warn that even secure platforms can’t prevent an attacker from pretending to be a trusted person.

Earlier this year, former National Security Adviser Michael Waltz accidentally added a journalist to a Signal group chat discussing classified U.S. military plans for Yemen, a blunder that ultimately contributed to his exit from the post and a clampdown on Signal for national security communications.

Even after those high-profile slip-ups, officials continue to rely on the app for both personal and professional messages. It’s a dangerous convenience.

How Easy Is It to Fake a Voice?

While AI voice cloning might sound futuristic, it’s shockingly easy and cheap. “Upload a few seconds of audio to a commercial service, click that you have permission, and type whatever you want them to say,” Farid explained. The result? Voicemails that can be nearly indistinguishable from the real person’s voice.

Once scammers obtain the phone numbers tied to officials’ Signal accounts, the door is wide open for impersonation. In Rubio’s case, the impostor used both text and voice messages, increasing the odds of convincing the targets.

FBI Sounds the Alarm

The FBI issued a warning in May about malicious actors impersonating senior U.S. officials through AI-generated texts and voice messages. These scams aim to extract sensitive information, steal money, or install malware.

“If you receive a message claiming to be from a senior U.S. official,” the FBI cautioned, “do not assume it is authentic.”

Cyber deception isn’t just an American problem. Ukraine’s Security Service recently reported Russian agents impersonating officials to recruit civilians for sabotage. In Canada, scammers have mimicked government leaders’ voices to steal money or plant spyware.

A New Era of Political Deepfakes

Rubio, who is also serving as Trump’s interim national security adviser, finds himself at the centre of a broader cybersecurity storm. Experts worry we’re entering an era where any politician’s voice or image can be hijacked, turning trust into a weapon.

The State Department has urged its employees to report any suspicious attempts to the Bureau of Diplomatic Security. Officials outside the State Department are encouraged to contact the FBI’s Internet Crime Complaint Center.

For now, it’s unclear whether any of the officials responded to the fake Rubio’s messages, or how close the scammer came to gathering valuable secrets.

But one thing is certain: AI-powered impersonations are no longer science fiction. They’re here—and targeting the world’s most powerful people.