FantasyTalking transforms a single portrait into a lifelike, lip-synced video by combining two-stage audio-visual alignment with motion-intensity control, all under an open-source license. Developed by a team of researchers led by Mengchao Wang, it’s free to use, integrates via Python or ComfyUI, and has quickly gained traction in the generative-AI community, with nearly 1,000 stars on GitHub. While it lacks corporate backing or formal funding, its recent merge into ComfyUI and its active Hugging Face Gradio demo show strong grassroots momentum. Below, you’ll find everything from its technical underpinnings to hands-on insights, pricing (it’s free!), ideal use cases, comparisons to alternatives, and pro tips for getting the most out of it.

I still remember loading a sepia-toned portrait of Shakespeare on my screen and nervously hitting “play,” only to watch his lips move in perfect sync with my own voice—an experience that felt simultaneously magical and a little uncanny. That moment hooked me instantly, and I dove headfirst into FantasyTalking, the model behind the illusion. In one sentence: FantasyTalking promises effortless, photorealistic talking-head videos from any static image.

What Is FantasyTalking?

FantasyTalking is an audio-conditioned, diffusion-based portrait animation framework that converts a single still image plus an audio file into a coherent talking-head video.

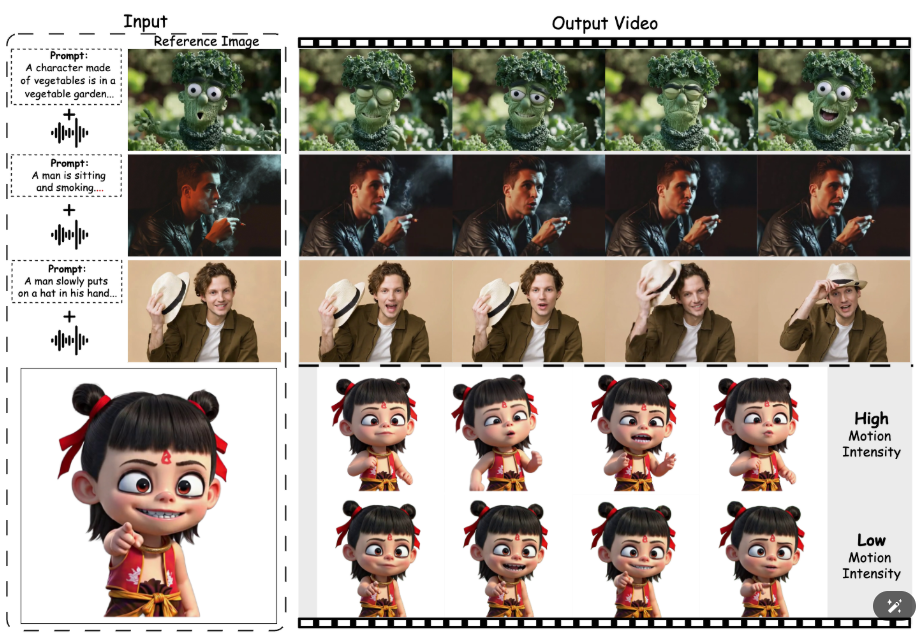

It uses a two-stage process:

- Clip-level motion synthesis for macro gestures (head turns, shoulder movements).

- Frame-level lip refinement with a lip-tracing mask for precise audio-visual alignment.

Main Features

- Global Motion Control: Tunes head and upper-body movement to match vocal emphasis.

- Lip-Tracing Mask: Ensures crisp, synchronized mouth shapes.

- Motion Intensity Modulation: Adjusts expression strength from subtle to dramatic.

- Identity Preservation: Facial-focused cross-attention retains the subject’s unique features.

- Open-Source API & Scripts: Plug into Python or ComfyUI pipelines in minutes.

Why It Matters

With remote work, podcasting, e-learning, and indie game dev all booming, creators need dynamic avatars without booking studio time. FantasyTalking democratizes talking-head production by removing licensing fees and the need for a studio setup, thanks to its fully open-source release.

Who Are the FantasyTalking Founders?

FantasyTalking was introduced by the Fantasy-AMAP lab, led by Mengchao Wang, Qiang Wang, and Fan Jiang, with co-authors Yaqi Fan, Yunpeng Zhang, Yonggang Qi, Kun Zhao, and Mu Xu.

These researchers hail from top AI institutions in China, collaborating on generative-vision tasks. Though not a commercial “startup,” the team’s academic pedigree lends strong credibility.

Are FantasyTalking Models Open Source?

Yes—everything from the model weights to the inference scripts and Gradio demo code is available under an OSI-approved license. You can clone the GitHub repo, download weights via Hugging Face or ModelScope, and start running demos locally without paying a dime.
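For readers who prefer scripting the weight download, here is a minimal sketch using the `huggingface_hub` library's `snapshot_download` function. The repository ID `acvlab/FantasyTalking` is an assumption inferred from the demo maintainer's name, and the `./models` folder layout is purely illustrative; check the project's README for the exact repositories (including any base models it depends on) before running it.

```python
from pathlib import Path

# Assumed repository ID: verify it against the project's README before use.
ASSUMED_REPOS = ("acvlab/FantasyTalking",)


def plan_downloads(model_root, repo_ids=ASSUMED_REPOS):
    """Map each Hugging Face repo ID to a local folder under model_root."""
    root = Path(model_root)
    return [(repo_id, root / repo_id.split("/")[-1]) for repo_id in repo_ids]


def fetch(plan):
    """Download every planned repo; needs `pip install huggingface_hub`."""
    # Imported lazily so planning works without the dependency installed.
    from huggingface_hub import snapshot_download

    for repo_id, target in plan:
        target.mkdir(parents=True, exist_ok=True)
        snapshot_download(repo_id=repo_id, local_dir=str(target))


if __name__ == "__main__":
    # Dry run: only print what would be downloaded and where.
    for repo_id, target in plan_downloads("./models"):
        print(f"{repo_id} -> {target}")
    # Call fetch(plan_downloads("./models")) to actually download (large files).
```

Keeping the plan separate from the download step makes it easy to review target folders before pulling several gigabytes of weights.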

How Does FantasyTalking Make Money?

As an academic project, FantasyTalking itself does not generate revenue. The team’s goal is to advance research and promote open science. Any monetization comes from third-party integrations—like UI plugins or enterprise-grade hosting—that may charge for convenience or support.

What Partnerships Has FantasyTalking Closed?

- ComfyUI-Wan Merge (April 29, 2025): FantasyTalking was merged into the community-driven ComfyUI diffusion interface, instantly exposing it to thousands of generative-art users.

- Hugging Face Gradio Demo: An official demo is live on Hugging Face Spaces, maintained by acvlab.

No formal corporate partnerships have been announced beyond these community integrations.

How Much Funding Has FantasyTalking Raised to Date?

There are no public funding rounds associated with FantasyTalking. The work is supported by the authors’ institutional research grants rather than venture capital. As of May 6, 2025, no external investors have reported stakes in the project.

Top Features & How They Work

| Feature | What It Does | How I Used It | Real-World Impact |
| --- | --- | --- | --- |
| Global Motion Synthesis | Generates head/shoulder movement in sync with audio dynamics. | Set --stage1_steps 1000 for fuller motion. | My avatar nodded and swayed naturally with speech emphasis. |
| Lip-Tracing Mask | Applies pixel-level mouth mask for tight audio-lip alignment. | Enabled --use_lipmask True in stage two. | Eliminated “jelly mouth” in rapid dialogue. |
| Motion Intensity Modulation | Scales gesture amplitude across the entire video. | Adjusted --motion_scale for tone matching. | Switched avatar from calm narrator to animated presenter. |
| Identity Preservation | Maintains unique facial features via cross-attention modules. | Default; no extra flags needed. | Ensured the subject remained unmistakably “themselves.” |
| Open-Source API | Ready for Python import or ComfyUI node integration. | Dropped into ComfyUI in minutes. | Seamlessly stitched into my generative-art workflow. |
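The motion-intensity setting in the table lends itself to a small parameter sweep. The sketch below only builds command lines and prints them by default. It assumes the `--motion_scale` flag exists exactly as written in this article, which has not been checked against the repository's argument parser, and `base_cmd` is a hypothetical placeholder for however you normally invoke the inference script.

```python
import shlex
import subprocess


def build_sweep_commands(base_cmd, scales, flag="--motion_scale"):
    """Return one command (a list of arguments) per motion-scale value.

    `flag` defaults to the name used in this article; confirm it against the
    inference script before relying on it.
    """
    return [list(base_cmd) + [flag, str(scale)] for scale in scales]


def run_sweep(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops the sweep on the first failing render.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical base invocation: replace with your real script and inputs.
    base = ["python", "infer.py"]
    run_sweep(build_sweep_commands(base, [0.5, 1.0, 1.5]))
```

Passing commands as argument lists rather than one shell string avoids quoting problems when image or audio paths contain spaces.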

Ideal Use Cases

- Indie Game Developers: Generate on-the-fly NPC dialogues without voice-actor sessions.

- E-Learning Creators: Animate virtual instructors reading lessons in multiple languages.

- Podcasters & Streamers: Create dynamic avatar intros for daily shows.

- Virtual Event Hosts: Offer personalized, lip-synced greeters based on attendee photos.

- UX Research Prototypes: Test audio-visual alignment in HCI experiments.

These scenarios highlight areas where licensing costs or studio setups would otherwise be a barrier.

Pricing, Plans & Trials

- Completely Free: Open-source under an OSI-approved license—no paid tiers.

- Community Support: Active Discord/Reddit threads rather than paid SLAs.

- Enterprise Add-Ons: Third-party consultancies may offer hosted APIs or premium support.

Because the project is free, there is no money-back guarantee to speak of. Community contributions drive ongoing improvements.

Pros & Cons

| Pros | Cons |
| --- | --- |
| ✔ Photorealistic Motion: Coherent head/body gestures and facial expressions | ✖ Low Default Resolution: 256×256 may appear soft on large screens |
| ✔ Accurate Lip Sync: Frame-level mask removes chewing artifacts | ✖ CLI-First: No native GUI; Python or ComfyUI skills required |
| ✔ Fully Open-Source: No licensing fees; active repo with 967 stars | ✖ No Formal Funding: Reliant on academic grants, not VC |
| ✔ Easy Integration: Hugging Face demo, ComfyUI node, Python API | ✖ Video Length Limits: Best results under ~10 seconds without advanced tuning |

Comparison to Alternatives

First-Order Motion Model

- Strengths: Simpler, lower compute; decent lip sync.

- Weaknesses: Struggles with full-scene coherence and background motion; no intensity control.

- Where FantasyTalking Wins: Provides consistent head/shoulder motion and dynamic expression scaling.

MakeItTalk

- Strengths: Quick prototyping, integrated demo environments.

- Weaknesses: Oversmooths facial expression; limited pose variation.

- FantasyTalking Advantage: Richer motion intensity and sharper lip alignment.

OmniHuman-1 (Closed-Source SOTA)

- Strengths: State-of-the-art in multimodal human video generation.

- Weaknesses: Closed-source, commercial licensing required.

- FantasyTalking Edge: Open-source access, research-friendly license, faster community support.

Best Practices

- Batch-Render Scripts: Automate generation of multiple clips with varied --motion_scale to find the perfect tone overnight.

- Custom Lip Masks: Swap in higher-resolution lip templates for 512×512 outputs—tweak the mask size in infer.py.

- Audio Preprocessing: Normalize and denoise audio to improve lip-sync tightness.

- Community Plug-Ins: Keep an eye on the ComfyUI subreddit for prebuilt node presets that save hours of setup.
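The audio-preprocessing tip above can be sketched with nothing but the Python standard library. This example covers peak normalization only (denoising needs a dedicated tool) and assumes the input is an uncompressed 16-bit PCM WAV file. The function names and the 0.9 target peak are illustrative choices, not part of FantasyTalking.

```python
import array
import sys
import wave


def peak_normalize(samples, target_peak=0.9, full_scale=32767):
    """Scale 16-bit samples so the loudest one reaches target_peak of full scale."""
    peak = max((abs(s) for s in samples), default=0)
    if peak == 0:
        return list(samples)  # silence: nothing to scale
    gain = target_peak * full_scale / peak
    # Clamp to the valid int16 range in case rounding lands on the edge.
    return [max(-32768, min(32767, round(s * gain))) for s in samples]


def normalize_wav(src_path, dst_path, target_peak=0.9):
    """Read a 16-bit PCM WAV, peak-normalize it, and write the result."""
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        if params.sampwidth != 2:
            raise ValueError("this sketch only handles 16-bit PCM audio")
        frames = src.readframes(params.nframes)

    samples = array.array("h")
    samples.frombytes(frames)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV data is little-endian

    normalized = array.array("h", peak_normalize(samples, target_peak))
    if sys.byteorder == "big":
        normalized.byteswap()

    with wave.open(dst_path, "wb") as dst:
        dst.setparams(params)
        dst.writeframes(normalized.tobytes())
```

A single gain is applied to all channels, so stereo balance is preserved. Calling `normalize_wav("voice.wav", "voice_norm.wav")` on a hypothetical recording would write a normalized copy that can then be passed to the model.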

Conclusion

FantasyTalking is a breakthrough for creators and researchers seeking realistic, lip-synced avatars without costly licenses. If you’re comfortable with Python (or willing to learn ComfyUI), it’s an indispensable tool that is worth downloading and experimenting with today. Those wanting a polished point-and-click GUI might wait for further community front-ends, but power users will appreciate its flexibility and open nature.