Google has quietly released one of its most radical AI updates yet.

The new Gemma 3 270M model is free, tiny enough to run on smartphones and Raspberry Pi computers, and uses less than 1% battery during extended conversations — setting a new benchmark for on-device AI.

Key Takeaways

- Google launches Gemma 3 270M, a free 270M-parameter AI model.

- Runs locally on phones, browsers, and Raspberry Pi with no cloud needed.

- Consumed just 0.75% battery after 25 chats on Pixel 9 Pro.

- Designed for fine-tuning, business tasks, and fast offline use.

- Marks a shift toward smaller, more practical AI models.

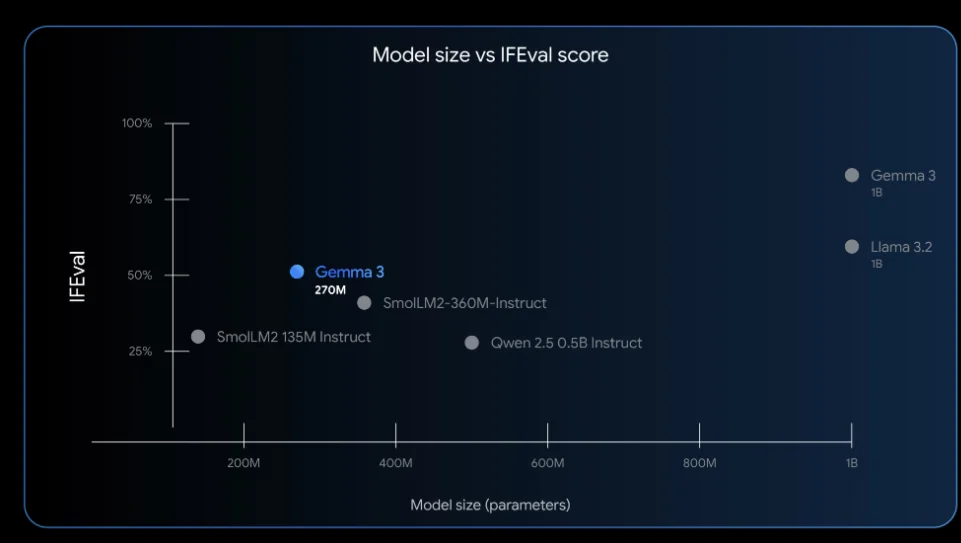

Gemma 3 270M is Google’s new free AI model with just 270M parameters, optimized for on-device use. Despite its small size, it handles complex tasks, consumes less than 1% phone battery during extended use, and supports fine-tuning for business and privacy-focused applications.

Google’s Free Gemma 3 270M AI: Small, Fast, and Everywhere

Google’s latest AI release, Gemma 3 270M, may be its most disruptive yet. At just 270 million parameters, the model is small enough to run locally on smartphones, laptops, and even Raspberry Pi devices — no cloud required.

During internal testing on a Pixel 9 Pro, 25 full conversations with the model used just 0.75% of the phone’s battery. That efficiency makes it one of the most practical consumer-facing AI releases to date.
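To put that battery figure in per-conversation terms, here is a rough back-of-the-envelope calculation. It takes the reported numbers at face value (0.75% for 25 conversations) and assumes, purely for illustration, that every conversation costs the same and that nothing else drains the battery. The extrapolation to a full charge is a naive linear estimate, not a measured result.

```python
# Figures quoted in the article: 0.75% battery for 25 conversations on a Pixel 9 Pro.
BATTERY_USED_PERCENT = 0.75
CONVERSATIONS = 25

# Average battery cost of one conversation, in percent of a full charge.
per_conversation = BATTERY_USED_PERCENT / CONVERSATIONS

# Naive linear extrapolation (assumption: constant cost per conversation,
# no other battery drain). Real-world numbers would be lower.
conversations_per_full_charge = 100 / per_conversation

print(f"{per_conversation:.2f}% of battery per conversation")
print(f"roughly {conversations_per_full_charge:.0f} conversations per full charge")
```

In other words, each conversation costs about three hundredths of a percent of the battery under these assumptions.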

“This marks a real shift in how we think about AI deployment — away from massive, expensive cloud models and toward lightweight, accessible tools,” said Prof. Gary Marcus, cognitive scientist and AI critic, in a recent interview about the trend toward smaller models.

Why Small Models Matter

Most AI models today operate with billions of parameters. By contrast, Gemma’s 270M size means faster fine-tuning, reduced hardware demand, and dramatically lower costs.
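A quick way to see why parameter count drives hardware demand is to estimate how much memory the weights alone occupy at different numeric precisions. The sketch below is an approximation under stated assumptions: it treats "270M" as exactly 270,000,000 parameters, counts weights only (no activations, cache, or runtime overhead), and compares against a hypothetical 7-billion-parameter model chosen only for illustration. Which precisions are actually available for Gemma 3 270M is not stated in this article.

```python
# Approximate weight storage for a model at several numeric precisions.
# Assumption: weights only; activations and runtime overhead are ignored.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}

GEMMA_PARAMS = 270_000_000      # "270M" taken as a round number
LARGE_PARAMS = 7_000_000_000    # hypothetical larger model, for contrast only


def weight_size_mb(params: int, bytes_per_param: float) -> float:
    """Return the size of the weights in megabytes (1 MB = 1,000,000 bytes)."""
    return params * bytes_per_param / 1_000_000


gemma_sizes = {p: weight_size_mb(GEMMA_PARAMS, b) for p, b in BYTES_PER_PARAM.items()}
large_sizes = {p: weight_size_mb(LARGE_PARAMS, b) for p, b in BYTES_PER_PARAM.items()}

for precision in BYTES_PER_PARAM:
    print(
        f"{precision:>8}: 270M model ~{gemma_sizes[precision]:,.0f} MB, "
        f"7B model ~{large_sizes[precision]:,.0f} MB"
    )
```

At 16-bit precision the smaller model's weights come to roughly 540 MB, compared with about 14,000 MB for the illustrative 7B model, which is the gap that makes phones and Raspberry Pi boards plausible targets.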

Adaptive ML, a partner of SK Telecom, reportedly used an earlier Gemma version to moderate multilingual content more cheaply and accurately than larger models (Google AI Blog).

“Specialized small models can often beat giants for specific tasks,” said Sara Hooker, head of Cohere For AI (MIT Tech Review, 2024). “Gemma is the clearest evidence yet that Google understands this shift.”

Real-World Use Cases

The Hugging Face team already built a bedtime story generator that runs fully offline with Gemma — a demonstration of its privacy benefits, since no data leaves the device.

Businesses, meanwhile, could leverage Gemma for:

- Sorting emails and support tickets.

- Automating compliance checks.

- Extracting key details from contracts.

- Routing customer service queries faster.
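As a rough illustration of the routing use case in the list above, the sketch below wraps a text-generation function in a small classifier. Everything here is hypothetical: the queue names, the prompt wording, and the `generate` callable, which stands in for whatever local runtime actually serves the model. A stub replaces the model so the example runs on its own, so it shows the shape of the approach rather than real model behavior.

```python
from typing import Callable

# Hypothetical support queues; a real deployment would define its own.
LABELS = ["billing", "technical", "account", "other"]


def build_prompt(ticket: str) -> str:
    """Build a short classification prompt for a single ticket."""
    options = ", ".join(LABELS)
    return (
        f"Classify the support ticket into one of: {options}.\n"
        f"Ticket: {ticket}\n"
        "Answer with one word."
    )


def route(ticket: str, generate: Callable[[str], str]) -> str:
    """Ask the model for a label and fall back to 'other' if none is recognized."""
    reply = generate(build_prompt(ticket)).strip().lower()
    for label in LABELS:
        if label in reply:
            return label
    return "other"


def fake_generate(prompt: str) -> str:
    """Stand-in for a locally running model; it always answers 'Billing'."""
    return " Billing\n"


print(route("I was charged twice this month.", fake_generate))
```

Keeping the model behind a plain callable means the routing logic can be tested without a model, and a fine-tuned checkpoint can be swapped in later without changing the surrounding code.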

One small business owner in Berlin who tested the model told us:

“For the first time, I can build AI tools without paying thousands in server bills. It feels like AI is finally accessible to small companies.”

Why It Matters

Gemma’s free release signals Google’s pivot toward democratizing AI. Instead of competing only on scale, it is betting that smaller, faster, and privacy-first models will define the next wave of AI adoption.

What’s Next

- Hardware makers are likely to build AI-optimized phones and laptops.

- Growth of marketplaces selling fine-tuned Gemma models.

- Wider adoption in education, SMEs, and emerging markets.

- Competitors like OpenAI and Anthropic are expected to launch similar lightweight models.

Source: Gemma