For most developers, AI tools feel like magic. You type a request, code appears, files change, and somehow the machine “understood” what you wanted. What usually stays hidden is the machinery underneath—the logic that decides when the model should think, when it should act, what tools it can touch, and how it avoids breaking your system.

That machinery is what OpenAI is now bringing into the open with a blog post that breaks down the Codex agent loop in detail. And while the post reads like a deep technical explainer, its implications go far beyond Codex itself. It offers a rare look at how modern AI agents are being designed to work safely, efficiently, and predictably inside real developer environments.

This is not just an engineering diary. It’s a signal of where AI-assisted software development is heading.

From “Smart Autocomplete” to Autonomous Co-Worker

For years, AI coding tools were essentially advanced suggestion engines. They completed lines, proposed functions, and occasionally refactored code—but they were passive. Codex represents a different category: an agent.

An agent doesn’t just respond. It plans, checks its work, runs commands, reads files, and adjusts based on what it finds. That shift requires something deceptively simple but incredibly powerful: a loop.

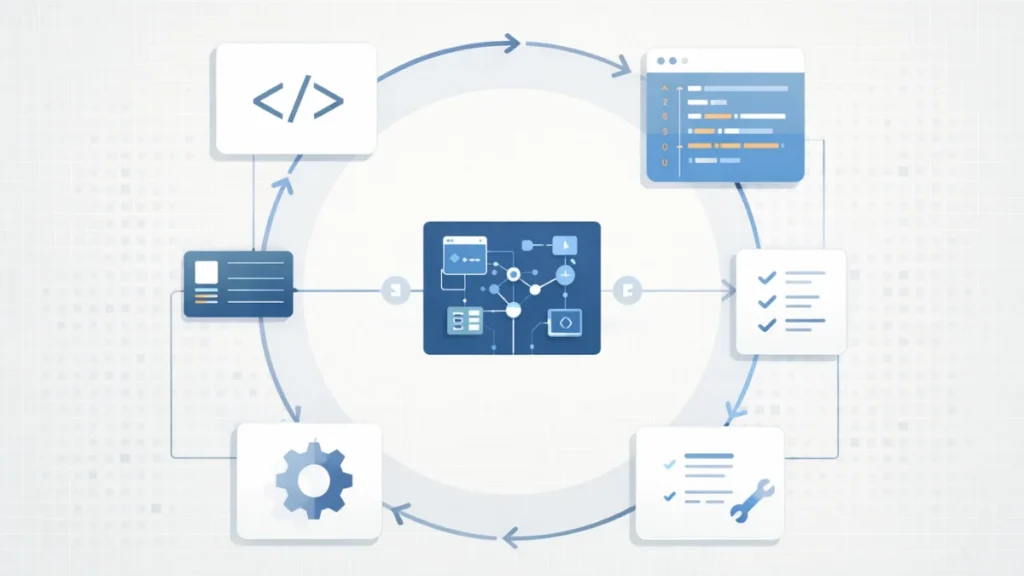

At its core, the Codex agent loop is a cycle:

- Understand the user’s intent

- Decide what to do next

- Use tools to act

- Observe the result

- Update its plan

- Repeat until the task is done

This loop is what allows Codex to feel less like a chatbot and more like a junior engineer sitting at your terminal.
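To make the cycle above concrete, here is a minimal Python sketch of an agent loop. It is not Codex's implementation: the model is replaced by a hypothetical scripted function, tools are plain Python functions, and every name here (`run_agent`, `ToolCall`, `FinalAnswer`, `scripted_model`, `read_file_stub`) is invented for illustration. What it shows is the shape of the loop: the model decides, the harness acts, and the observation is appended to the transcript that the next model call sees.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class ToolCall:
    name: str       # which tool the model wants to use
    argument: str   # a single string argument, to keep the sketch small


@dataclass
class FinalAnswer:
    text: str       # the model's answer once it decides the task is done


Step = Union[ToolCall, FinalAnswer]


def scripted_model(transcript: list[dict]) -> Step:
    """Hypothetical stand-in for a model call: read the README once, then answer."""
    observations = [m["content"] for m in transcript if m["role"] == "tool"]
    if not observations:
        return ToolCall("read_file", "README.md")
    return FinalAnswer(f"Summary based on: {observations[-1]}")


def read_file_stub(path: str) -> str:
    """Hypothetical tool; a real agent would read from disk inside its sandbox."""
    return f"<contents of {path}>"


def run_agent(request: str,
              model: Callable[[list[dict]], Step],
              tools: dict[str, Callable[[str], str]],
              max_turns: int = 10) -> tuple[str, list[dict]]:
    transcript = [{"role": "user", "content": request}]      # understand the intent
    for _ in range(max_turns):
        step = model(transcript)                             # decide what to do next
        if isinstance(step, FinalAnswer):                    # task done: leave the loop
            transcript.append({"role": "assistant", "content": step.text})
            return step.text, transcript
        transcript.append({"role": "assistant",
                           "content": f"call {step.name}({step.argument!r})"})
        tool = tools.get(step.name)
        # Act; an unknown tool becomes an error observation instead of a crash,
        # so the model can adapt on its next turn.
        output = tool(step.argument) if tool else f"error: unknown tool {step.name!r}"
        transcript.append({"role": "tool", "content": output})  # observe
    raise RuntimeError("turn limit reached before the task finished")


answer, transcript = run_agent("Summarize the README", scripted_model,
                               {"read_file": read_file_stub})
print(answer)  # Summary based on: <contents of README.md>
```

The `max_turns` cap is a design choice worth keeping in any version of this loop: without it, a model that never returns a final answer would keep calling tools indefinitely.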

Why the “Loop” Is the Real Innovation

Large language models are good at generating text. They are not naturally good at interacting with messy, stateful systems like file directories, shells, or build environments. The agent loop bridges that gap.

Instead of asking the model to do everything in one shot, Codex breaks work into steps. It runs a command, sees the output, feeds that result back into the model, and asks, “Now what?”

This is a critical design choice. It mirrors how human developers work:

- We inspect before we change

- We verify before we commit

- We adapt when reality doesn’t match expectations

By formalizing this behavior, Codex moves closer to trustworthy automation.

Safety Isn’t a Feature—It’s the Architecture

One of the most underappreciated aspects of Codex’s design is its sandboxing model.

Codex can run shell commands, but not freely. Every tool comes with constraints:

- Defined working directories

- Explicit permission rules

- Clear boundaries between Codex-provided tools and user-defined tools

This matters because an AI agent with unrestricted system access is not a productivity tool—it’s a liability. Codex’s loop ensures that even when the model requests an action, the agent layer decides whether and how that action is executed.

In other words, intelligence proposes—but infrastructure disposes.

That separation is likely to become a standard pattern across future AI agents.
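The "model proposes, harness decides" split can be sketched as a policy check that runs before any command is executed. The sketch below is hypothetical: `SandboxPolicy`, `check_command`, and the `user_approves` callback are invented names, and Codex's actual permission rules are not reproduced here. It checks only two things, that the working directory stays inside an allowed tree and that the program is on an allow-list or explicitly approved. It does not inspect arguments or resolve symlinks, so on its own it is a policy layer, not a substitute for OS-level isolation.

```python
from __future__ import annotations

import posixpath
import shlex
from dataclasses import dataclass
from pathlib import PurePosixPath
from typing import Callable


@dataclass(frozen=True)
class SandboxPolicy:
    workdir: PurePosixPath            # commands may only run inside this directory tree
    allowed_programs: frozenset[str]  # programs that run without asking
    needs_approval: frozenset[str]    # programs that require explicit user approval


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str


def check_command(policy: SandboxPolicy, command: str, cwd: str,
                  user_approves: Callable[[str], bool] = lambda cmd: False) -> Decision:
    # Collapse ".." lexically so "/repo/../etc" is not mistaken for a path inside /repo.
    # Symlinks are NOT resolved; real isolation needs enforcement below this layer.
    here = PurePosixPath(posixpath.normpath(cwd))
    if here != policy.workdir and policy.workdir not in here.parents:
        return Decision(False, f"working directory {cwd} is outside {policy.workdir}")
    try:
        argv = shlex.split(command)
    except ValueError:  # e.g. an unclosed quote
        return Decision(False, "could not parse command")
    if not argv:
        return Decision(False, "empty command")
    program = argv[0]  # "ls;" stays a distinct token, so chained commands are not allow-listed
    if program in policy.allowed_programs:
        return Decision(True, "allowed by policy")
    if program in policy.needs_approval:
        if user_approves(command):
            return Decision(True, "approved by user")
        return Decision(False, "user declined")
    return Decision(False, f"{program!r} is not permitted")


policy = SandboxPolicy(
    workdir=PurePosixPath("/repo"),
    allowed_programs=frozenset({"ls", "cat", "pytest"}),
    needs_approval=frozenset({"git"}),
)
print(check_command(policy, "pytest -q", "/repo/tests"))
# Decision(allowed=True, reason='allowed by policy')
```

Returning a `Decision` with a reason, rather than a bare boolean, lets the harness feed the refusal back to the model as an observation so it can choose a different action.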

The Hidden Cost of “Thinking”: Context and Performance

Another key insight from the Codex design is how expensive intelligence can become if left unmanaged.

Every conversation with an AI agent grows over time. Every command, result, and response adds tokens. Without careful control, the model eventually hits its context window limit—or becomes painfully slow and expensive.

Codex tackles this with two strategies:

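- Prompt prefix stability: keeping the beginning of each request identical across turns, so the repeated prefix can be served from the prompt cache instead of being reprocessed

- Automatic compaction: once the conversation grows too long, condensing older history into a shorter form that preserves what the agent needs to keep working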
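Both strategies can be illustrated with a small sketch, assuming a conversation is a list of `(role, content)` pairs. Everything here is hypothetical: `shared_prefix_len` measures how much of the previous request a new request repeats verbatim (the part a prefix cache could reuse), `estimate_tokens` uses a rough four-characters-per-token heuristic instead of a real tokenizer, and `compact` with its `naive_summarize` stub stands in for whatever summarization Codex actually performs, which is not shown here.

```python
from __future__ import annotations

from typing import Callable


def shared_prefix_len(prev: list[tuple[str, str]], curr: list[tuple[str, str]]) -> int:
    """Count leading messages that are identical in two consecutive requests."""
    n = 0
    for a, b in zip(prev, curr):
        if a != b:
            break
        n += 1
    return n


def estimate_tokens(messages: list[tuple[str, str]]) -> int:
    """Hypothetical heuristic: roughly 4 characters per token."""
    return sum(len(content) // 4 + 1 for _, content in messages)


def compact(messages: list[tuple[str, str]], budget: int, keep_recent: int,
            summarize: Callable[[list[tuple[str, str]]], str]) -> list[tuple[str, str]]:
    """Replace older messages with one summary once the estimate exceeds `budget`.

    The first message (system instructions) and the last `keep_recent`
    messages are kept verbatim; everything in between is summarized.
    """
    if estimate_tokens(messages) <= budget:
        return messages
    cut = max(1, len(messages) - keep_recent)
    older = messages[1:cut]
    if not older:
        return messages  # nothing old enough to compact
    return messages[:1] + [("summary", summarize(older))] + messages[cut:]


def naive_summarize(msgs: list[tuple[str, str]]) -> str:
    """Hypothetical stub; a real agent would ask the model for a summary."""
    return f"[summary of {len(msgs)} earlier messages]"


history = [("system", "You are a coding agent.")] + [("tool", "x" * 400) for _ in range(5)]
next_turn = history + [("assistant", "running tests")]
print(shared_prefix_len(history, next_turn))  # 6: appending keeps the whole old request as prefix

compacted = compact(history, budget=300, keep_recent=2, summarize=naive_summarize)
print(len(compacted), shared_prefix_len(history, compacted))  # 4 1
```

The last line shows the trade-off between the two strategies: compaction shrinks the prompt, but everything after the system message changes, so the cached prefix drops to a single message. That is one reason to compact only when a budget is actually exceeded rather than on every turn.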

This is not glamorous work, but it’s essential. As AI agents move from demos to daily tools, performance discipline becomes as important as model quality.

The takeaway: building reliable agents isn’t about bigger models—it’s about smarter orchestration.

Why This Matters Beyond Codex

You don’t need to use Codex CLI to feel the impact of this design.

The agent loop described here is rapidly becoming the blueprint for:

- AI coding assistants

- Automated DevOps tools

- Enterprise workflow agents

- Secure on-device AI systems

The emphasis on stateless requests, compatibility with zero data retention (ZDR) configurations, and predictable tool use reflects a broader shift in the industry. AI systems are being designed not just to perform, but to behave well under real-world constraints.

That’s a quiet but profound evolution.

What Comes Next

If this first deep dive is any indication, future Codex updates will likely focus on:

- More sophisticated planning tools

- Richer tool ecosystems

- Stronger guarantees around safety and reproducibility

- Better long-running task management

Longer term, expect this agent-loop architecture to bleed into IDEs, CI pipelines, and cloud platforms. The line between “developer” and “AI assistant” will continue to blur—but the systems that succeed will be the ones built with restraint, transparency, and control.

Bigger Picture

AI progress is often measured in parameters, benchmarks, and demos. But real progress shows up in places like this: a carefully engineered loop that decides when a model should speak—and when it should stop.

Codex’s agent loop is not flashy. It doesn’t promise sentience or unchecked autonomy. What it offers instead is something far more valuable to working developers: predictable intelligence.

And that may be the most important upgrade of all.