Alibaba’s AI team has released Ovis 2.5, an open-source multimodal large language model (MLLM) that is already drawing widespread attention.

With breakthroughs in visual perception and reasoning, the new 9B and 2B models could reshape how open-source AI handles complex data — from STEM problems to document-heavy workflows.

Key Takeaways

- Alibaba launches Ovis 2.5 multimodal AI with 9B and 2B variants.

- Native-resolution vision transformer improves OCR, charts, and infographics.

- The 9B model leads all open-source models under 40B parameters on the OpenCompass multimodal leaderboard.

- “Thinking mode” boosts step-by-step reasoning for STEM and research.

- Lightweight 2B model brings high-end multimodal AI to edge devices.

Alibaba’s Ovis 2.5 is a multimodal AI model that excels in vision and reasoning tasks, offered in 9B and 2B parameter variants. With a native-resolution vision transformer and an optional “thinking mode,” its 9B model leads all open-source models under 40B parameters on the OpenCompass multimodal leaderboard, making advanced multimodal AI more accessible to researchers and more practical on everyday devices.

Alibaba Unveils Ovis 2.5

Alibaba’s AIDC-AI team has released Ovis 2.5, its latest multimodal large language model (MLLM), in 9B and 2B parameter versions. The models are already topping open-source leaderboards in their size classes, with the 9B version setting a new high-water mark among open-source models under 40B parameters.

Some observers are already calling it one of the most notable open-source AI releases of 2025.

Native-Resolution Vision Breakthrough

A standout feature in Ovis 2.5 is its native-resolution vision transformer (NaViT). Earlier open models typically tiled images or resized them to a fixed resolution, which often loses fine detail; Ovis 2.5 instead processes images at their native resolution and aspect ratio. As a result, everything from cluttered receipts to complex medical charts can be analyzed with higher accuracy.

“Maintaining image integrity at variable resolutions is a game-changer for document-heavy industries,” said Dr. Tianlong Chen, an AI researcher at Rice University (Rice University, 2024). “This pushes open models closer to real-world usability.”
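To make the difference concrete, here is a minimal Python sketch that counts how many patches (visual tokens) a vision transformer sees when an image is patchified at its native size versus forced into a fixed square. The 14-pixel patch size, the 448×448 fixed input, and all function names are illustrative assumptions, not Ovis 2.5’s actual configuration or code. Real native-resolution encoders also cap the total pixel count and pack patches from several images into one sequence.

```python
import math
from typing import Tuple

PATCH = 14  # assumed patch size in pixels; illustrative, not Ovis 2.5's exact config


def native_patch_grid(width: int, height: int, patch: int = PATCH) -> Tuple[int, int]:
    """Patch grid (cols, rows) when the image keeps its own resolution.

    Each side is padded up to the next multiple of the patch size, so the
    aspect ratio is preserved and every patch covers patch x patch source pixels.
    """
    return math.ceil(width / patch), math.ceil(height / patch)


def resized_patch_grid(size: int = 448, patch: int = PATCH) -> Tuple[int, int]:
    """Patch grid after forcing every image to a fixed size x size square."""
    return size // patch, size // patch


def pixels_per_patch_side(width: int, height: int, grid: Tuple[int, int]) -> Tuple[float, float]:
    """How many original pixels each patch spans along x and y for a given grid."""
    cols, rows = grid
    return width / cols, height / rows


if __name__ == "__main__":
    w, h = 588, 1960  # a tall, receipt-like image (hypothetical dimensions)
    native = native_patch_grid(w, h)
    fixed = resized_patch_grid()
    print("native grid:", native, "tokens:", native[0] * native[1])
    print("fixed grid:", fixed, "tokens:", fixed[0] * fixed[1])
    print("source px per patch (fixed):", pixels_per_patch_side(w, h, fixed))
```

For the 588×1960 example, the native grid is 42×140 (5,880 patches, each covering 14×14 source pixels), while the fixed 448×448 resize yields 1,024 patches that each span about 18.4×61.3 source pixels, squashing text more than four times vertically. Native-resolution processing avoids that distortion at the cost of more tokens for large images.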

Reasoning With Reflection

Ovis 2.5 also adds an optional “thinking mode” that lets users trade speed for deeper reasoning. Instead of rushing to answers, the model reflects, self-corrects, and explains its steps.

“This mirrors how humans double-check their work,” said Sharon Zhou, CEO of Lamini AI. “For STEM education, scientific research, or even math tutoring, this could be transformative.”

On Reddit’s LocalLLaMA forum, early testers said the feature “feels like having a professor pause and explain their reasoning instead of blurting out a solution.”

Benchmark Dominance

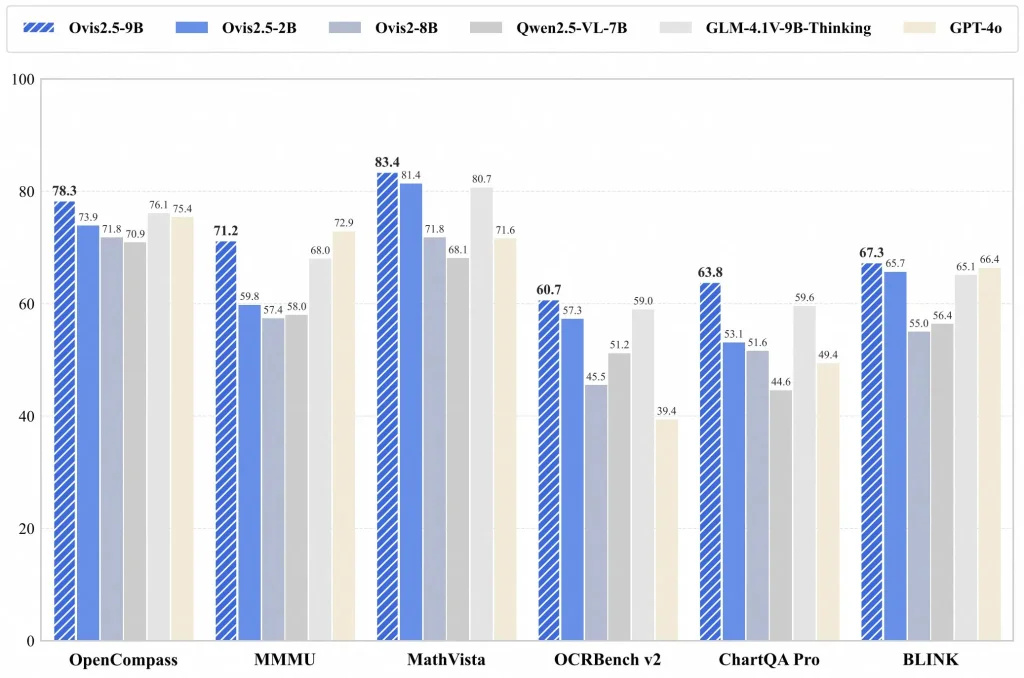

The performance numbers are striking. Ovis 2.5-9B achieved an average score of 78.3 on the OpenCompass multimodal leaderboard, the best among open-source MLLMs under 40B parameters, while the 2B version scored 73.9, the top result in its size class.

The models excel in:

- STEM reasoning (MathVista, WeMath)

- OCR and chart analysis (OCRBench v2, ChartQA Pro)

- Visual grounding (RefCOCO)

- Multi-image and video understanding (BLINK, VideoMME)

These benchmarks underline how Ovis 2.5 is narrowing the gap between open and proprietary AI.

High Efficiency, Small Footprint

Training efficiency has also been a focus. Alibaba reports a 3–4× throughput speedup using hybrid parallelism and multimodal data packing. The lightweight 2B version continues the “small model, big performance” philosophy, making advanced multimodal AI viable on mobile hardware and edge devices.
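Data packing targets a common source of wasted compute: short samples padded out to a fixed sequence length. The Python sketch below is a minimal illustration that assumes a simple first-fit-decreasing bin packer; Ovis 2.5’s actual packing scheme may differ, and the sample lengths and 4,096-token limit are hypothetical. A real implementation must also stop packed samples from attending to each other, for example with block-diagonal attention masks.

```python
from typing import List


def pack_first_fit_decreasing(lengths: List[int], max_len: int) -> List[List[int]]:
    """Greedily pack sample lengths into rows of capacity max_len.

    Samples are placed longest-first into the first row with enough free
    space; a new row is opened only when no existing row fits.
    """
    rows: List[List[int]] = []
    remaining: List[int] = []  # free capacity left in each row
    for n in sorted(lengths, reverse=True):
        if n > max_len:
            raise ValueError(f"sample of length {n} exceeds max_len={max_len}")
        for i, free in enumerate(remaining):
            if n <= free:
                rows[i].append(n)
                remaining[i] -= n
                break
        else:  # no existing row had room
            rows.append([n])
            remaining.append(max_len - n)
    return rows


def padding_fraction(num_rows: int, lengths: List[int], max_len: int) -> float:
    """Share of token slots that are padding when num_rows rows of max_len are used."""
    total = num_rows * max_len
    return (total - sum(lengths)) / total


if __name__ == "__main__":
    MAX_LEN = 4096
    # Hypothetical token counts for a mix of image-heavy and text-only samples.
    lengths = [3000, 1200, 900, 2500, 600, 4000, 1800, 700]
    rows = pack_first_fit_decreasing(lengths, MAX_LEN)
    print("rows:", rows)
    print(f"unpacked padding: {padding_fraction(len(lengths), lengths, MAX_LEN):.1%}")
    print(f"packed padding:   {padding_fraction(len(rows), lengths, MAX_LEN):.1%}")
```

With these example lengths, the eight samples fit into 4 rows instead of 8, and padding drops from about 55% to about 10% of token slots. The gain here is purely illustrative; the reported 3–4× speedup also reflects hybrid parallelism and real training data.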

Why It Matters

Open-source AI has long lagged behind commercial labs in handling dense visuals and reasoning-heavy tasks. Ovis 2.5 narrows that gap significantly — giving researchers, startups, and educators access to tools that previously required costly, proprietary models.

Numbers to Watch

- 78.3 — Ovis 2.5-9B score on OpenCompass leaderboard.

- 3–4× — Reported training throughput improvement.

- 2B parameters — Lightweight variant optimized for mobile/edge use.

What’s Next

- Wider adoption in education and STEM tutoring platforms.

- Potential integration into enterprise OCR and workflow tools.

- Expansion of “thinking mode” into more open-source projects.

- Growing pressure on closed AI labs to match open-source speed.

Conclusion

Alibaba’s Ovis 2.5 isn’t just another AI model release. It’s strong evidence that open-source models can now compete with proprietary systems in visual understanding and reasoning. As these capabilities move onto phones and laptops, the line between research-grade AI and consumer-grade tools is blurring fast.

The big question: will Ovis 2.5 spark a new wave of open-source adoption, or will closed AI labs double down to stay ahead?