Artificial intelligence keeps getting bigger, but sometimes smaller is smarter. That’s the thinking behind Gemma 3 270M, Google’s newest compact model. Unlike billion-parameter giants, this lean 270-million-parameter model is designed for on-device use, low power consumption, and easy fine-tuning.

And the best part? You can download it right now—whether you’re in the US or the EU—through Hugging Face, Ollama, LM Studio, Kaggle, or even Docker. Here’s a complete guide to downloading and running Gemma 3 270M, plus why this little model could make a big impact.

What Is Gemma 3 270M?

Gemma 3 270M is the newest addition to Google’s Gemma 3 family, which launched in March 2025; the 270M model followed in August 2025. With just 270 million parameters, it’s the smallest model in the lineup, yet it punches above its weight.

Google ships the model in both pretrained and instruction-tuned versions. The instruction-tuned one follows natural-language prompts more reliably than the raw pretrained checkpoint. In practice, this makes it useful for tasks like:

- Text summarization

- Sentiment classification

- Named entity recognition

- Lightweight chat applications

- Real-time data extraction
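Tasks like sentiment classification tend to work best with a small model when the prompt constrains the answer and your own code validates it. The sketch below is an illustration, not Google’s recommended template: the label set, the prompt wording, and the helper names `build_sentiment_prompt` and `parse_label` are all hypothetical, and sending the prompt to the model is left to whichever runtime you choose.

```python
import re

# Hypothetical label set; adjust it to your own task.
LABELS = ("positive", "negative", "neutral")


def build_sentiment_prompt(text: str) -> str:
    """Build a short, tightly constrained instruction for a small instruction-tuned model."""
    options = ", ".join(LABELS)
    return (
        f"Classify the sentiment of the following review as one of: {options}.\n"
        "Answer with the label only.\n\n"
        f"Review: {text}"
    )


def parse_label(model_output: str, default: str = "neutral") -> str:
    """Return the first known label found in the model's reply, else a default."""
    for word in re.findall(r"[a-z]+", model_output.lower()):
        if word in LABELS:
            return word
    return default


print(build_sentiment_prompt("The battery life is fantastic."))
print(parse_label("Positive."))  # positive
```

Validating the reply in code matters more for a 270M model than for a large one, since small models are more likely to add stray words or formatting around the label.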

According to Google’s developer blog, the model’s INT4-quantized version used only 0.75% of a Pixel 9 Pro’s battery across 25 conversations, a stunningly low power draw for an AI model. For context, that’s the kind of efficiency that makes mobile, private AI assistants finally viable.

“Gemma 3 270M is a high-quality foundation model optimized for fine-tuning and on-device deployment—it’s lean but effective.” — Google Gemma team
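To put the 0.75%-over-25-conversations figure in perspective, a quick back-of-envelope calculation helps. The extrapolation to a full charge is a naive linear assumption for illustration only; real drain depends on conversation length, screen use, and everything else the phone is doing.

```python
# Figures from Google's reported test: INT4-quantized model on a Pixel 9 Pro.
BATTERY_USED_PCT = 0.75  # percent of a full charge
CONVERSATIONS = 25

per_conversation = BATTERY_USED_PCT / CONVERSATIONS

# Naive linear extrapolation (assumption): ignores screen, radios, and prompt length.
conversations_per_charge = 100 / per_conversation

print(f"{per_conversation:.2f}% of the battery per conversation")
print(f"~{conversations_per_charge:,.0f} conversations per full charge, model alone")
```

That works out to about 0.03% per conversation; the full-charge number is an upper bound, since the model is never the only thing drawing power.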

Why It Matters

For years, cutting-edge AI models have demanded cloud servers, GPUs, and large power budgets. Gemma 3 270M flips that logic. It’s small enough to run locally on laptops and even some smartphones, but still flexible enough to adapt to a wide range of use cases.

That combination—private, low-latency, and fine-tunable—could democratize AI development, putting powerful tools in the hands of startups, hobbyists, educators, and small businesses without breaking budgets.

How to Download Gemma 3 270M

Here’s where you can get the model today, and the exact steps for each platform.

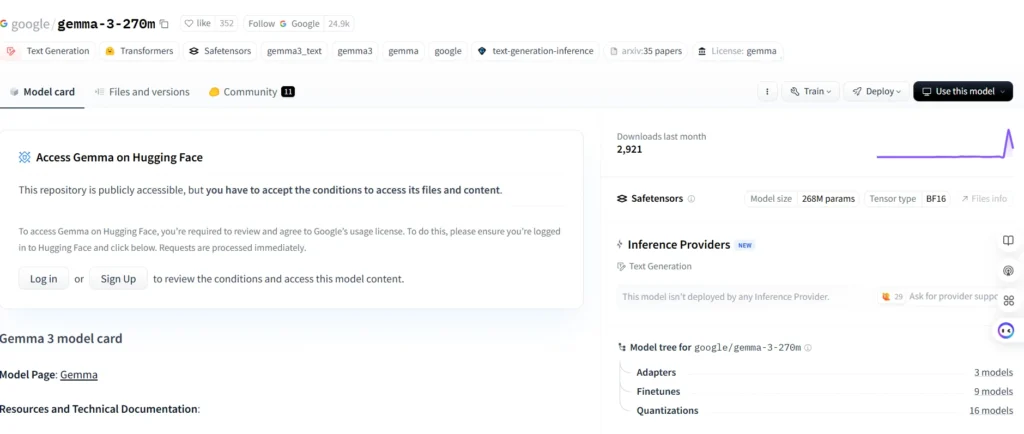

Hugging Face

- Visit the Gemma 3 270M model card (google/gemma-3-270m, or google/gemma-3-270m-it for the instruction-tuned version).

- Log in to your Hugging Face account.

- Accept Google’s usage license.

- Download the model weights directly.

This is the most straightforward way if you want to fine-tune or integrate the model into code.
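If you call the instruction-tuned weights from your own code, prompts need to follow Gemma’s chat format, which wraps each turn in `<start_of_turn>` and `<end_of_turn>` markers and uses the role name `model` for the assistant. The function below is a simplified sketch of that format (the name `format_gemma_prompt` is hypothetical). It folds any system message into the next user turn, since Gemma has no separate system role, and it omits the leading `<bos>` token on the assumption that your tokenizer adds it.

```python
from typing import Dict, List


def format_gemma_prompt(messages: List[Dict[str, str]]) -> str:
    """Render chat messages in Gemma's turn format, ending with an open model turn.

    Simplified sketch: a system message is folded into the next user turn, and
    the leading <bos> token is left for the tokenizer to add (assumption).
    """
    system = ""
    parts = []
    for msg in messages:
        role, content = msg["role"], msg["content"].strip()
        if role == "system":
            system = content
            continue
        if role not in ("user", "assistant"):
            raise ValueError(f"unsupported role: {role}")
        if role == "user" and system:
            content = f"{system}\n\n{content}"
            system = ""
        turn_role = "model" if role == "assistant" else "user"
        parts.append(f"<start_of_turn>{turn_role}\n{content}<end_of_turn>\n")
    # An open model turn tells the model to generate the assistant's reply.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


prompt = format_gemma_prompt([
    {"role": "system", "content": "Answer in one sentence."},
    {"role": "user", "content": "What is Gemma 3 270M?"},
])
print(prompt)
```

In real code, prefer `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` from Hugging Face Transformers, which renders the exact template shipped with the model; the sketch is mainly useful for seeing what that template produces.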

Ollama

If you’re using Ollama for local models:

- Install or update Ollama (version 0.6+ required).

- Run the command:

ollama run gemma3:270m

This pulls the model (a few hundred megabytes on first run) and makes it usable through the Ollama interface.
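Besides the interactive prompt, Ollama serves a local REST API (by default at http://localhost:11434), which is how most apps talk to the model. Below is a minimal sketch using only Python’s standard library, assuming the default address and the `gemma3:270m` tag; the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_generate_request(prompt: str, model: str = "gemma3:270m") -> urllib.request.Request:
    """Build a non-streaming /api/generate request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str) -> str:
    """Send the request and return the model's text (requires Ollama to be running)."""
    with urllib.request.urlopen(build_generate_request(prompt), timeout=120) as resp:
        # With "stream": False, Ollama returns one JSON object; "response" holds the text.
        return json.loads(resp.read())["response"]
```

With the model pulled and Ollama running, `generate("Summarize in one sentence: ...")` returns the completion as a plain string.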

LM Studio

LM Studio provides a user-friendly desktop interface.

- Open LM Studio.

- Search for “gemma-3-270m.”

- Download one of the available GGUF quantized builds (around 241 MB for Q4_0).

Simon Willison, a developer who tested it, noted:

“Tiny download (~241 MB via LM Studio GGUF), works out-of-the-box for prompts and mini-apps.” — Simon Willison’s blog

Kaggle or Docker

Google’s official Gemma documentation provides instructions for downloading via Kaggle Notebooks or Docker images. This is best for developers who want reproducible environments or cloud-based workflows.

Real-World Anecdote

Aisha, a London-based software developer, shared her first impression after downloading:

“I grabbed Gemma 3 270M via LM Studio and it installed in seconds. I ran a bedtime-story demo in the browser—it chimed fast and felt more magical than heavyweight models I’d tried before.”

That speed and responsiveness are exactly what Google is betting on: making AI feel instant instead of sluggish.

Impact

For everyday users, Gemma 3 270M means AI without the cloud tax. If you’re a student, freelancer, or small business owner, you can now run AI tools locally—saving money, boosting privacy, and cutting latency. Even on modest laptops, you can spin up chatbots, summarizers, or text-analysis apps that respond instantly.

Numbers to Watch

Download size: ~241–300 MB (depending on quantization)

Battery consumption: 0.75% on Pixel 9 Pro after 25 conversations (INT4)

Context length: up to 32K (32,768) tokens, enough for long documents and extended chats

What’s Next

Developers will begin fine-tuning Gemma 3 270M for specialized apps.

Expect more wrappers and integrations with tools like LangChain and LM Studio.

Mobile AI assistants could shift from cloud-only to offline-first models.

A wave of hobbyist projects could explode on GitHub, powered by Gemma 3.

Conclusion

Gemma 3 270M proves that smaller doesn’t mean weaker. It’s fast, private, efficient, and accessible today. For developers and everyday users alike, this compact model could mark a turning point—AI that truly fits in your pocket.

As on-device AI evolves, the real question is: what happens when everyone carries a personal AI that doesn’t need the cloud? The answer could reshape how we work, play, and create.

FAQs: How to Download & Run Gemma 3 270M

Where do I download Gemma 3 270M?

The easiest sources are the official Hugging Face repo, the Ollama model library (CLI/GUI), and LM Studio’s catalog (GGUF variants). Kaggle and Docker are also options.

What’s the exact command to run it with Ollama?

Install Ollama, then run:

ollama run gemma3:270m

This pulls the 270M instruction-tuned variant and starts a local chat session.

How big is the download?

The GGUF build tested via LM Studio was ~241 MB (very small by LLM standards). Size varies by quantization, but expect a few hundred megabytes.
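Download size follows roughly from parameter count times bits per weight. The sketch below (the helper name is hypothetical) computes that naive floor for a few common formats, using the effective bits of llama.cpp-style blocks: Q8_0 and Q4_0 each store one fp16 scale per 32 weights, which works out to 8.5 and 4.5 bits per weight.

```python
def estimate_weights_mb(num_params: float, bits_per_weight: float) -> float:
    """Naive lower bound on weight storage: params x bits / 8, in megabytes (10**6 bytes)."""
    return num_params * bits_per_weight / 8 / 1e6


PARAMS = 270e6  # nominal parameter count

# Effective bits per weight; block formats add one fp16 scale per 32 weights.
for name, bits in [("BF16", 16), ("Q8_0", 8.5), ("Q4_0", 4.5)]:
    print(f"{name}: ~{estimate_weights_mb(PARAMS, bits):.0f} MB")
```

The Q4_0 estimate (about 152 MB) is well below the ~241 MB file LM Studio reports. A gap like that is expected if some tensors are kept at higher precision and the file also bundles tokenizer data and metadata, so treat the formula as a lower bound rather than a prediction.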

What’s the context window?

Gemma 3 270M supports up to 32k tokens (handy for longer docs and prompt chains).
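A 32K window holds a lot, but longer inputs still need splitting. One simple approach, sketched here under stated assumptions, packs paragraphs into chunks sized by a rough four-characters-per-token heuristic (an approximation for English text; exact counts require the Gemma tokenizer) while reserving room for instructions and the answer. `chunk_text` and its constants are hypothetical.

```python
from typing import List

CONTEXT_TOKENS = 32_768  # 32K window
RESERVED_TOKENS = 1_024  # room for instructions and the model's answer (assumption)
CHARS_PER_TOKEN = 4      # rough heuristic for English text, not a real tokenizer


def chunk_text(text: str, context_tokens: int = CONTEXT_TOKENS,
               reserved: int = RESERVED_TOKENS) -> List[str]:
    """Split text on paragraph boundaries into chunks that should fit the window."""
    budget_chars = (context_tokens - reserved) * CHARS_PER_TOKEN
    chunks: List[str] = []
    current = ""
    for para in text.split("\n\n"):
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= budget_chars:
            current = candidate
            continue
        if current:
            chunks.append(current)
        # A single oversized paragraph is hard-split by characters.
        while len(para) > budget_chars:
            chunks.append(para[:budget_chars])
            para = para[budget_chars:]
        current = para
    if current:
        chunks.append(current)
    return chunks
```

Each chunk can then be summarized separately and the partial summaries combined in a final call, a common map-then-reduce pattern for small models.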

Can I use it commercially? What’s the license?

Gemma models are released under Google’s Gemma Terms of Use (open-weights with usage terms). Review the license before commercial deployments.

Does it run on Windows/Mac/Linux—and offline?

Yes. Ollama and LM Studio provide installers for Windows, macOS, and Linux. Once downloaded, it runs fully offline on your machine.

What hardware do I need?

This tiny model runs on modest CPUs. The quantized weights take only a few hundred megabytes, so even older laptops with 8 GB of RAM have plenty of headroom. A GPU improves speed but isn’t required.
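To sanity-check memory needs, you can estimate them as weights plus the attention key/value cache. The sketch uses the standard full-attention formula; the layer and head values are illustrative assumptions rather than confirmed specs. Because Gemma 3 interleaves local (sliding-window) attention layers, its real cache should be smaller than this bound.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   tokens: int, bytes_per_value: int = 2) -> int:
    """Upper bound on KV-cache size if every layer attends over all `tokens`.

    The leading factor of 2 covers storing both keys and values;
    bytes_per_value=2 corresponds to fp16/bf16 entries.
    """
    return 2 * layers * kv_heads * head_dim * tokens * bytes_per_value


# Illustrative architecture values (assumption): read the real ones from the
# model's config.json (num_hidden_layers, num_key_value_heads, head_dim).
LAYERS, KV_HEADS, HEAD_DIM = 18, 1, 256
CONTEXT = 32_768
WEIGHTS_BYTES = 241e6  # ~241 MB Q4_0 GGUF, as reported via LM Studio

cache = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, CONTEXT)
total_gb = (WEIGHTS_BYTES + cache) / 1e9
print(f"KV cache at full context: ~{cache / 1e6:.0f} MB")
print(f"Weights + cache: ~{total_gb:.2f} GB (runtime overhead not included)")
```

Even under these pessimistic full-attention assumptions, the total stays under 1 GB at the full 32K context.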

How good is performance? Any benchmarks?

Ollama’s page lists quick-check scores (e.g., HellaSwag 37.7, PIQA 66.2, ARC-c 28.2 for the IT 270M). It’s not a general-purpose giant; it’s designed for fast, low-cost tasks and fine-tuning.

How do I fine-tune Gemma 3 270M?

Google’s ai.google.dev docs provide a step-by-step fine-tuning guide using Transformers/TRL, with Colab and Kaggle options. Start there for full-model or LoRA approaches.
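LoRA suits small fine-tuning budgets because it freezes a pretrained weight matrix W (d_out × d_in) and trains only a low-rank update BA, where B is d_out × r and A is r × d_in. That adds r(d_in + d_out) trainable parameters per adapted matrix instead of d_out × d_in. The shapes below are hypothetical, chosen only to show the arithmetic.

```python
def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in matrix: B (d_out x r) + A (r x d_in)."""
    return d_out * rank + rank * d_in


def full_params(d_out: int, d_in: int) -> int:
    """Parameters updated when fine-tuning the same matrix directly."""
    return d_out * d_in


# Hypothetical projection shape and rank, for illustration only.
D_OUT, D_IN, RANK = 640, 640, 8
added = lora_params(D_OUT, D_IN, RANK)
full = full_params(D_OUT, D_IN)
print(f"LoRA: {added:,} trainable params vs {full:,} for the full matrix ({added / full:.1%})")
```

Because the base weights stay frozen, gradients and optimizer state are only kept for the small A and B matrices, which is a large part of why LoRA cuts fine-tuning memory.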

What’s new or special about Gemma 3 overall?

Gemma 3 is a lightweight open-weights family emphasizing efficiency (smaller KV cache, longer context) with a technical report detailing architecture and training. The 270M variant is the compact, hyper-efficient option tuned for on-device use.

Bonus: Quick Start Paths

- Hugging Face → download weights → use in transformers/ggml pipelines.

- Ollama → one-liner install + ollama run gemma3:270m.

- LM Studio → GUI download of GGUF builds → chat & local API.
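Once you start LM Studio’s local server, it exposes loaded models through an OpenAI-compatible API (by default at http://localhost:1234/v1), so existing OpenAI-style client code can point at Gemma 3 270M. Below is a standard-library sketch. The model identifier is a hypothetical placeholder, so check the name LM Studio shows (or query `GET /v1/models`) before using it.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # LM Studio's default local server address
MODEL_ID = "gemma-3-270m-it"  # hypothetical identifier; list real ones via GET /v1/models


def build_chat_request(user_text: str, temperature: float = 0.2) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_text}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(user_text: str) -> str:
    """Send one chat turn; requires the LM Studio server to be running with the model loaded."""
    with urllib.request.urlopen(build_chat_request(user_text), timeout=120) as resp:
        body = json.loads(resp.read())
    return body["choices"][0]["message"]["content"]
```

Because the request shape matches OpenAI’s chat completions format, switching between LM Studio and other compatible servers is mostly a matter of changing `BASE_URL` and `MODEL_ID`.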