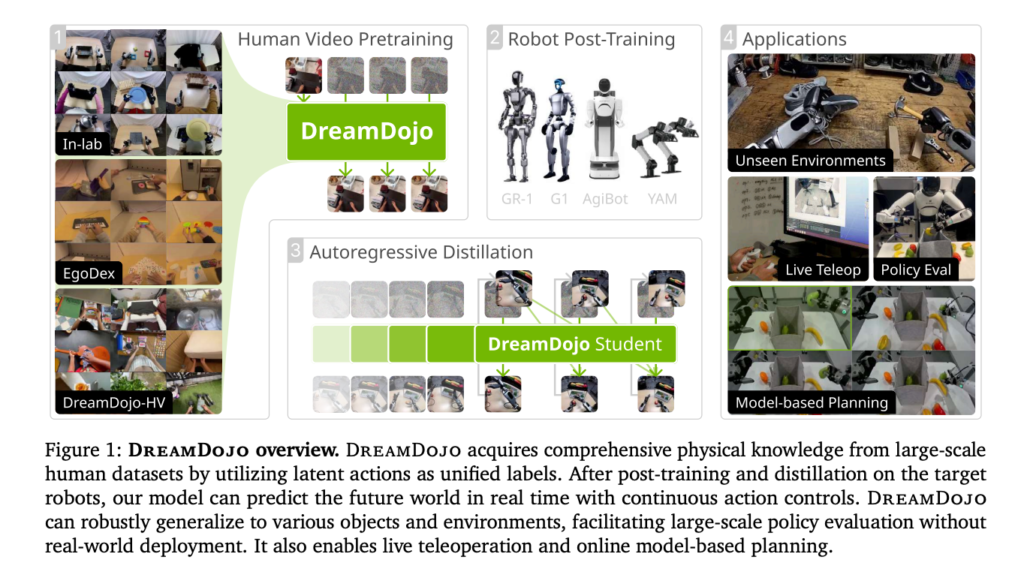

NVIDIA has released DreamDojo, an open-source robot world model trained on 44,711 hours of real-world human video. Instead of relying on traditional physics engines, DreamDojo predicts the outcome of robot actions directly in pixels, effectively “dreaming” the next state of the world. The system is available with full model weights, training code, and benchmarks, marking one of the most ambitious open robotics releases to date.

The launch positions NVIDIA not just as a chip supplier, but as a foundational model provider for physical AI.

Key Summary

- DreamDojo is trained on 44,711 hours of egocentric human video spanning 6,015 tasks and 9,869 scenes.

- It replaces manually coded physics engines with learned visual prediction.

- Two model sizes were trained: 2B and 14B parameters, using 100,000 H100 GPU hours.

- A distilled version runs in real time at 10.81 frames per second and stays stable over rollouts of about 60 seconds.

- Policy success rates measured in simulation show a 0.995 Pearson correlation with real-world success rates.

- NVIDIA has released full weights and training code, enabling post-training on custom robot data.

Shift From Engines to Learned Physics

For decades, robotics simulation required carefully programmed physics rules and detailed 3D modeling. Engineers manually tuned friction, collision, and gravity parameters. The process was time-consuming and often brittle.

DreamDojo moves away from rule-based simulation toward learned physical intuition.

Rather than calculating physics step by step, the model learns how the world behaves by watching humans interact with it: pouring liquids, folding clothes, packing objects. These actions encode physical laws visually. DreamDojo learns those patterns and predicts what happens next.

In practical terms, robots can now train inside a virtual environment that behaves more like reality, without engineers hand-coding every physical rule.

For robotics teams, that means fewer simulation bottlenecks and potentially faster iteration cycles.

Solving Robotics’ Data Bottleneck

The central constraint in robotics AI has long been data collection.

Collecting robot-specific trajectories is expensive and slow. Hardware breaks. Environments vary. Scaling is difficult.

DreamDojo sidesteps this constraint by pretraining on 44,711 hours of egocentric human video — the largest dataset of its kind for world model training.

The DreamDojo-HV dataset includes over one million trajectories and more than 43,000 unique objects. Humans have already mastered complex physical tasks. By observing them, the model acquires a baseline understanding of motion, object behavior, and causality.

This mirrors what large language models did for text — scaling first, specializing later.

Making Human Video Robot-Readable

Human videos do not include robot motor commands. That creates a translation problem.

To solve it, NVIDIA introduced continuous latent actions.

A spatiotemporal Transformer-based variational autoencoder (VAE) analyzes two consecutive frames and compresses their motion into a 32-dimensional vector. In simple terms, it converts visual change into a compact action representation.
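
The idea of compressing a frame pair into a 32-dimensional latent action can be illustrated with a minimal sketch. This is not NVIDIA's implementation: the real encoder is a spatiotemporal Transformer, while the version below swaps in random, untrained linear projections purely to show the data flow. The names `make_encoder`, `encode_latent_action`, and `kl_to_standard_normal` are hypothetical, as is the 8x8 frame size.

```python
import math
import random

LATENT_DIM = 32  # size of the latent action vector reported in the article


def make_encoder(num_pixels, latent_dim=LATENT_DIM, seed=0):
    """Build random (untrained) weights for the mean and log-variance heads.

    Assumption: a linear map stands in for the Transformer encoder.
    """
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(2 * num_pixels)

    def matrix():
        return [[rng.gauss(0.0, scale) for _ in range(2 * num_pixels)]
                for _ in range(latent_dim)]

    return {"w_mean": matrix(), "w_logvar": matrix()}


def encode_latent_action(frame_t, frame_t1, encoder, rng):
    """Map two consecutive flattened frames to a sampled latent action."""
    x = list(frame_t) + list(frame_t1)  # the encoder sees both frames
    mean = [sum(w * v for w, v in zip(row, x)) for row in encoder["w_mean"]]
    logvar = [sum(w * v for w, v in zip(row, x)) for row in encoder["w_logvar"]]
    # Reparameterization trick: z = mean + sigma * eps, with eps ~ N(0, 1)
    z = [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
         for m, lv in zip(mean, logvar)]
    return z, mean, logvar


def kl_to_standard_normal(mean, logvar):
    """KL(q(z|x) || N(0, I)): the VAE term that acts as the bottleneck."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mean, logvar))


# Hypothetical 8x8 grayscale frames, flattened to 64 values each
rng = random.Random(1)
frame_a = [rng.random() for _ in range(64)]
frame_b = [rng.random() for _ in range(64)]
encoder = make_encoder(num_pixels=64)
z, mean, logvar = encode_latent_action(frame_a, frame_b, encoder, rng)
print(len(z))  # 32
```

In a trained VAE, the KL term penalizes latents that carry more information than the decoder needs, which is one standard way to obtain the kind of bottleneck described here.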

This creates an information bottleneck that separates motion from visual noise.

The result is a hardware-agnostic action interface that can transfer learned physical intuition across different robot bodies.

For operators, this is strategically significant. If latent actions generalize across hardware platforms, DreamDojo becomes a reusable foundation rather than a robot-specific model.

Architecture Designed for Generalization

DreamDojo builds on the Cosmos-Predict2.5 latent video diffusion model and integrates the WAN2.2 tokenizer with temporal compression.

The team introduced three key refinements:

- Relative actions using joint deltas instead of absolute poses, improving trajectory flexibility.

- Chunked action injection, aligning four actions per latent frame to preserve causal consistency.

- Temporal consistency loss, reducing visual artifacts and enforcing physically coherent motion.
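
The first two refinements are simple to sketch. The snippet below is an illustration under stated assumptions, not the released training code: `to_relative_actions` and `chunk_actions` are hypothetical helper names, and the 2-joint trajectory is made up. Only the chunk size of four comes from the article.

```python
ACTIONS_PER_LATENT_FRAME = 4  # chunk size reported in the article


def to_relative_actions(joint_positions):
    """Convert a sequence of absolute joint poses into per-step joint deltas."""
    return [
        [curr - prev for prev, curr in zip(prev_pose, curr_pose)]
        for prev_pose, curr_pose in zip(joint_positions, joint_positions[1:])
    ]


def chunk_actions(actions, chunk_size=ACTIONS_PER_LATENT_FRAME):
    """Group consecutive actions so each latent frame receives one chunk."""
    if len(actions) % chunk_size != 0:
        raise ValueError("number of actions must be a multiple of chunk_size")
    return [actions[i:i + chunk_size]
            for i in range(0, len(actions), chunk_size)]


# Hypothetical 2-joint trajectory: 9 absolute poses -> 8 deltas -> 2 chunks
poses = [[0.25 * t, 0.5 * t] for t in range(9)]
deltas = to_relative_actions(poses)
chunks = chunk_actions(deltas)
print(len(deltas), len(chunks))  # 8 2
```

Because deltas describe motion relative to the current pose, the same action sequence stays meaningful from different starting configurations, which is the flexibility the first refinement is after.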

The 14B model achieved 73.5 percent physics correctness and 72.55 percent action-following accuracy, outperforming the 2B variant.

These numbers indicate measurable improvement, though broader real-world validation remains necessary.

Real-Time Interaction Through Distillation

Diffusion models are typically too slow for robotics simulation. Standard inference requires dozens of denoising steps.

NVIDIA addressed this through a Self-Forcing distillation pipeline trained on 64 H100 GPUs.

The student model reduces denoising from 35 steps to just 4 steps.

The outcome is 10.81 FPS real-time performance, with stable rollouts lasting 600 frames, or about 60 seconds.
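
A quick back-of-the-envelope check of these figures, using only the numbers quoted above. Note that the step reduction is not necessarily the wall-clock speedup, since per-step cost and other overheads are not reported here.

```python
TEACHER_STEPS = 35     # denoising steps before distillation
STUDENT_STEPS = 4      # denoising steps after distillation
FPS = 10.81            # reported throughput of the distilled model
ROLLOUT_FRAMES = 600   # reported stable rollout length

step_reduction = TEACHER_STEPS / STUDENT_STEPS   # 8.75x fewer denoising steps
ms_per_frame = 1000.0 / FPS                      # about 92.5 ms per frame
rollout_seconds = ROLLOUT_FRAMES / FPS           # about 55.5 s of simulated time

print(f"{step_reduction:.2f}x fewer steps")
print(f"{ms_per_frame:.1f} ms per frame")
print(f"{rollout_seconds:.1f} s per 600-frame rollout")
```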

For robotics development, that threshold matters. Below real-time, interactive planning and teleoperation become impractical.

At roughly 10 FPS, DreamDojo transitions from research prototype to usable simulator.

Downstream Performance Signals

Two evaluation results stand out.

- First, policy evaluation inside DreamDojo achieved a Pearson correlation of 0.995 with real-world success rates. In practical terms, simulated results closely matched physical testing outcomes.

- Second, model-based planning improved real-world fruit-packing success rates by 17 percent, doubling performance compared to random sampling approaches.
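
For readers unfamiliar with the metric, the sketch below shows how a Pearson correlation between simulated and real success rates is computed. The success-rate values are hypothetical placeholders, not DreamDojo results, and `pearson` is a hand-written helper rather than anything from the release.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical success rates for five policies (NOT figures from the release)
simulated = [0.10, 0.35, 0.50, 0.70, 0.90]
real = [0.12, 0.30, 0.55, 0.68, 0.88]
r = pearson(simulated, real)
print(f"Pearson r = {r:.3f}")
```

A value near 1 means the simulator ranks and spaces policies much as real-world testing does. It does not by itself mean the absolute success rates match.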

If reproducible, that level of predictive fidelity could significantly reduce hardware testing risk and iteration cost.
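
The model-based planning result rests on a simple loop: sample candidate action sequences, roll each one out in the world model, and execute the best-scoring one. The sketch below shows that loop under heavy assumptions. `toy_world_model` is a one-dimensional stand-in for a learned video model, and `score` and `plan` are hypothetical names, none of which come from the DreamDojo release.

```python
import random


def toy_world_model(state, action):
    """Hypothetical stand-in for a learned world model: predict the next state."""
    return state + action


def score(state, goal):
    """Higher is better: negative distance between predicted state and goal."""
    return -abs(goal - state)


def plan(state, goal, num_candidates=16, horizon=3, seed=0):
    """Sample action sequences, roll each out in the model, keep the best."""
    rng = random.Random(seed)
    best_score, best_seq = None, None
    for _ in range(num_candidates):
        seq = [rng.uniform(-1.0, 1.0) for _ in range(horizon)]
        predicted = state
        for action in seq:
            predicted = toy_world_model(predicted, action)
        candidate_score = score(predicted, goal)
        if best_score is None or candidate_score > best_score:
            best_score, best_seq = candidate_score, seq
    return best_seq, best_score


# With one candidate, planning degenerates to executing a random sample
_, random_score = plan(state=0.0, goal=2.0, num_candidates=1)
best_seq, planned_score = plan(state=0.0, goal=2.0, num_candidates=16)
print(planned_score >= random_score)  # True
```

With the same seed, the first candidate is identical in both calls, so the planned score can never be worse than the single random sample. How much better it gets in practice depends on how faithfully the world model predicts outcomes.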

Competitive Context

Several research labs and startups are developing robotics world models. What differentiates DreamDojo is scale, open release, and real-time distillation.

By releasing full weights and training infrastructure, NVIDIA is positioning itself as an infrastructure layer for robotics AI — not merely a hardware vendor.

Traditional physics engine developers should monitor this shift closely. If learned simulators reach consistent reliability, rule-based engines may gradually lose dominance.

The risk remains generalization. Human video pretraining does not guarantee performance across unseen industrial environments.

Enterprise adoption will depend on whether DreamDojo’s simulated accuracy holds in high-stakes, safety-critical settings.

The next milestone to watch is third-party validation. If robotics startups and enterprise labs demonstrate reproducible transfer gains using DreamDojo, it could become the default pretraining backbone for physical AI. If those results do not materialize, it may remain a technically impressive but niche research release.

The market will decide based on real-world transfer, not benchmark optics.