At a time when AI labs are locking down models faster than ever, the Allen Institute for AI (Ai2) just dropped a rare counter-move: Olmo 3, a fully open model family that exposes every step of the development pipeline, not just the final weights.

It’s not just a new set of LMs. It’s transparency as a feature.

Why this matters now

Most modern AI models are built like black boxes: trained on opaque data, tuned with undisclosed methods, and shipped with little insight into how they arrive at their outputs.

Olmo 3 flips that script by publishing the full model flow, from datasets to intermediate checkpoints to RL training stages, plus a new “Think” lineage that produces step-by-step reasoning traces you can inspect as the model generates them.

If you’ve ever wished you could actually see why a model arrives at an answer, this is that moment.

The big release: Olmo 3 in a nutshell

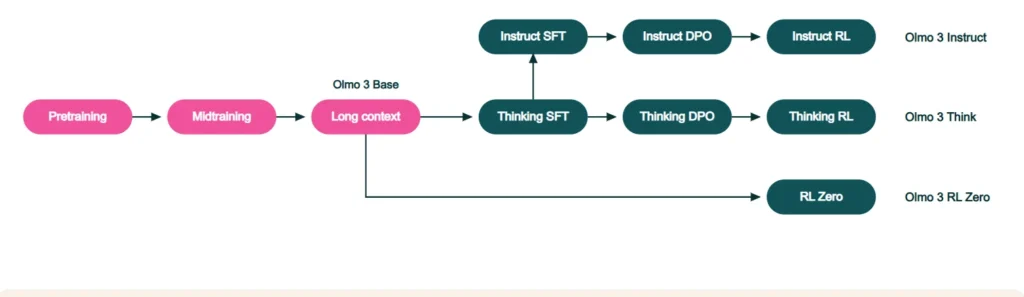

Ai2’s new suite arrives in four flavors:

• Olmo 3-Base (7B, 32B)

The strongest fully open base model Ai2 has released. It beats or matches other fully open models like Marin 32B and Apertus 70B and competes with open-weight systems like Gemma 3 and Qwen 2.5 across reading comprehension, math, programming, and long-context tests (up to ~65K tokens).

• Olmo 3-Think (7B, 32B)

The flagship.

This reasoning-first model is designed to expose step-by-step thinking, let researchers trace its behavior back to training data, and serve as a workhorse for RL research. The 32B version competes closely with Qwen 3 32B on benchmarks like MATH, OMEGA, HumanEvalPlus, and BigBench Hard.

• Olmo 3-Instruct (7B)

A smaller, chat-optimized model that outperforms many open-weight models in its size class on instruction following, tool use, and conversational tasks. It’s aimed at agents, copilots, and general chat experiences.

• Olmo 3-RL Zero (7B)

A fully open RL pathway that applies reinforcement learning directly to the base model, with checkpoints for math, code, instruction following, and general chat. It is designed specifically so researchers can benchmark RL techniques that use verifiable rewards.
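To make “verifiable rewards” concrete: instead of scoring outputs with a learned reward model, RL with verifiable rewards checks each answer against a known-correct result. Below is a minimal, hypothetical Python sketch for math problems. It assumes the model wraps its final answer in `\boxed{...}`; the function names and the exact-match rule are illustrative assumptions, not Ai2’s Olmo 3 reward code.

```python
import re
from typing import Optional

# Assumed answer format (hypothetical): the model ends with a LaTeX-style \boxed{...} answer.
# This simple pattern does not handle nested braces such as \boxed{\frac{1}{2}}.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in the completion, or None."""
    matches = BOXED.findall(completion)
    return matches[-1].strip() if matches else None


def verifiable_math_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 if the final answer matches the reference exactly, else 0.0."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0  # No parsable answer earns no reward.
    return 1.0 if answer == reference.strip() else 0.0


print(verifiable_math_reward(r"So the answer is \boxed{42}.", "42"))  # 1.0
```

Because the check is deterministic, anyone can recompute the same reward, which is what makes RL results comparable across research groups. Real graders usually normalize answers more aggressively (fractions, units, equivalent expressions) than this exact-string match.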

The real breakthrough: the open model flow

Here’s the twist that sets Olmo 3 apart from most open model releases of 2025:

Ai2 didn’t just drop weights; it published the entire pipeline.

That includes:

- Dolma 3 (a new ~9.3T-token corpus)

- Dolma 3 Mix (a ~6T-token pretraining mix with a heavier share of math and code)

- Dolmino (a mid-training mix focused on harder, higher-quality data)

- Longmino (long-context mix)

- Dolci (post-training SFT/DPO/RL mixes)

- Full checkpoints from every stage

- Tooling for decontamination, deduplication, evaluation, and data tracing
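For readers unfamiliar with decontamination and deduplication, here is a minimal sketch of both ideas in their simplest form: exact deduplication by hashing normalized text, and decontamination by dropping training documents that share a word n-gram with benchmark items. It is a hypothetical Python illustration, not Ai2’s tooling; the function names and the default n-gram length of 8 are assumptions chosen for the example.

```python
import hashlib
from typing import Iterable, List, Set, Tuple


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't hide matches."""
    return " ".join(text.lower().split())


def exact_dedup(docs: Iterable[str]) -> List[str]:
    """Keep the first occurrence of each document whose normalized text is identical."""
    seen: Set[str] = set()
    kept: List[str] = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept


def word_ngrams(text: str, n: int) -> Set[Tuple[str, ...]]:
    """All contiguous n-word windows of the normalized text (empty if fewer than n words)."""
    tokens = normalize(text).split()
    return {tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1)}


def decontaminate(docs: List[str], benchmark_items: List[str], n: int = 8) -> List[str]:
    """Drop any training document that shares at least one word n-gram with a benchmark item."""
    banned: Set[Tuple[str, ...]] = set()
    for item in benchmark_items:
        banned |= word_ngrams(item, n)
    return [doc for doc in docs if word_ngrams(doc, n).isdisjoint(banned)]
```

Production pipelines typically add fuzzy matching (for example, MinHash for near-duplicates) and specialized indexes to scale these checks, but the core logic is the same: find overlap, then drop or flag it.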

This means anyone can fork the model at any stage, inject domain-specific data, run their own SFT/DPO/RL experiments, or audit why the model behaves the way it does.

It’s a rare level of transparency, bordering on academic-grade reproducibility.

Why this hits the industry now

Ai2’s move lands during a tense moment for open-source AI.

Most high-performing “open” models in 2025 ship only weights: no data, no training code, and no reasoning transparency. Some researchers call them “open-weight, closed-everything-else.”

Olmo 3 breaks that trend by:

- Publishing datasets

- Opening every training stage

- Making reasoning inspectable

- Releasing full data recipes

- Releasing RL pathways

- Enabling source-level traceability
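Source-level traceability means matching what a model writes against what it was trained on. As a rough illustration only, the hypothetical Python sketch below greedily finds spans of a model’s output that appear verbatim, token for token, in a small training corpus. The function name and the `min_len` threshold are assumptions. A real system working over trillions of tokens needs a dedicated index rather than this brute-force substring search, and this is not Ai2’s tracing tool.

```python
from typing import List


def traced_spans(output: str, corpus_docs: List[str], min_len: int = 3) -> List[str]:
    """Greedily find output spans of at least min_len tokens that occur verbatim in the corpus."""
    out_tokens = output.lower().split()
    # Pad each document with spaces so substring checks only match whole tokens.
    corpus = [" " + " ".join(doc.lower().split()) + " " for doc in corpus_docs]

    def occurs(span: List[str]) -> bool:
        needle = " " + " ".join(span) + " "
        return any(needle in doc for doc in corpus)

    spans: List[str] = []
    i = 0
    while i < len(out_tokens):
        # Extend the match starting at position i as far as it stays in the corpus.
        j = i
        while j < len(out_tokens) and occurs(out_tokens[i : j + 1]):
            j += 1
        if j - i >= min_len:
            spans.append(" ".join(out_tokens[i:j]))
            i = j  # Skip past the matched span.
        else:
            i += 1
    return spans


corpus = ["the quick brown fox jumps over the lazy dog"]
print(traced_spans("I saw the quick brown fox yesterday near the lazy dog", corpus))
```

The greedy left-to-right scan keeps the longest match starting at each position and then jumps past it, so overlapping matches can be missed; that trade-off keeps the example short.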

This is the kind of radical openness many researchers have been calling for since 2023.

What’s next

Ai2 says Olmo 3 is only the beginning. More model flows, more toolkits, and more reasoning-focused releases are coming — especially around the 32B scale, which the institute calls a “sweet spot” for real research and practical deployment.

If the community picks this up, Olmo 3 could become the new default scaffold for open-source LLM development.