A new leak suggests OpenAI is about to release a powerful open-source AI model, possibly within hours. With 120B parameters and a Mixture of Experts architecture, the release could reshape the AI ecosystem and silence critics of OpenAI’s recent closed-door strategy.

OpenAI’s Return to Openness? Massive 120B Open-Source AI Leak Suggests So

In a development sending shockwaves through the AI community, OpenAI may be on the verge of launching a game-changing open-source model—and it could be happening any minute now.

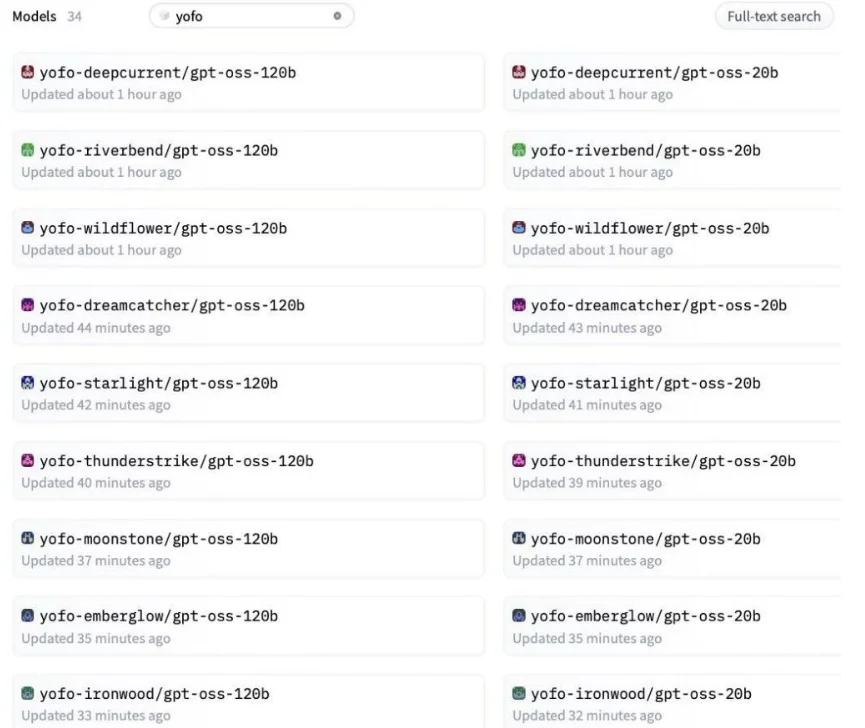

The leak surfaced via GitHub-like repositories that briefly went live under usernames linked to OpenAI team members. These repositories, with cryptic names like yofo-deepcurrent/gpt-oss-120b, were quickly taken down—but not before developers captured screenshots and unearthed crucial configuration files.

The term “gpt-oss” is telling. Most interpret it as GPT Open Source Software, a surprising shift from a company that has grown increasingly protective of its top-tier models. And the presence of multiple model sizes—from 20B to 120B—suggests a coordinated launch, not a one-off experiment.

Key Takeaways:

- Leak reveals: 120B-parameter AI model likely built on a Mixture of Experts (MoE) architecture.

- Efficient design: Only 4 of 128 “expert” modules activate for each token, cutting the compute needed per token at scale.

- Competitive edge: Rivals Meta’s LLaMA and Mistral’s Mixtral in open-source AI space.

- Multilingual power: A large vocabulary for multilingual text, plus Sliding Window Attention for smoother long-text handling.

- Strategic timing: Likely response to criticism of OpenAI’s closed strategy & rising open-source rivals.

The leaked configuration shows this isn’t just another large language model; it’s a Mixture of Experts (MoE) powerhouse. Rather than a single giant brain, it operates more like a council of 128 expert modules. For each token it processes, a router selects only four of them, so the model runs with roughly the compute of a much smaller network while drawing on the knowledge stored across its full parameter count.
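To make the routing idea concrete, here is a minimal pure-Python sketch of top-k Mixture of Experts routing, using the 128-expert, top-4 figures from the leak. Everything else is an assumption for illustration: the toy experts simply scale their input (real experts are feed-forward sub-networks), the router is a plain linear score, and the helper names (`route`, `moe_layer`, `make_expert`) are hypothetical, not OpenAI’s code.

```python
import math
import random

NUM_EXPERTS = 128  # expert count reported in the leaked configuration
TOP_K = 4          # experts activated per token, per the leak


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def router_logits(token_vec, router_weights):
    """Router score per expert: dot product of the token with that expert's router row."""
    return [sum(w * x for w, x in zip(row, token_vec)) for row in router_weights]


def route(logits, k=TOP_K):
    """Return [(expert_index, gate_weight), ...] for the k highest-scoring experts.

    Gate weights are a softmax over the selected logits only, so they sum to 1.
    """
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])
    return list(zip(top, gates))


def moe_layer(token_vec, router_weights, experts, k=TOP_K):
    """Weighted sum of the outputs of the k routed experts for one token vector."""
    out = [0.0] * len(token_vec)
    for idx, gate in route(router_logits(token_vec, router_weights), k):
        expert_out = experts[idx](token_vec)  # only the k selected experts run
        out = [o + gate * e for o, e in zip(out, expert_out)]
    return out


def make_expert(scale):
    """Hypothetical toy expert that scales its input; real experts are small MLPs."""
    return lambda v: [scale * x for x in v]


if __name__ == "__main__":
    random.seed(0)
    dim = 8  # toy hidden size, not the real model's
    router_weights = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(NUM_EXPERTS)]
    experts = [make_expert(random.uniform(0.5, 1.5)) for _ in range(NUM_EXPERTS)]
    token = [random.gauss(0, 1) for _ in range(dim)]

    print("fraction of experts active:", TOP_K / NUM_EXPERTS)
    print("routed experts:", route(router_logits(token, router_weights)))
    print("output:", moe_layer(token, router_weights, experts))
```

The design choice that matters is that `moe_layer` calls only the four selected experts; the other 124 sit idle for that token, which is where the savings come from. In this sketch that is 4/128, or 1/32, of the expert layers; in a typical MoE design the per-token cost also includes shared components such as attention, so the real active-parameter share is somewhat higher.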

This approach echoes the strategies of Mistral’s Mixtral and Meta’s LLaMA models, both of which have dominated the open-source discussion. But if OpenAI drops its own version—especially one with 120 billion parameters—it would be nothing short of seismic.

And this time, OpenAI seems to be doing more than just matching specs. The model reportedly has an extended vocabulary and uses Sliding Window Attention, in which each token attends only to a fixed window of recent tokens rather than to the entire sequence. That keeps memory and compute in check on long conversations or documents, which for developers could mean faster inference on long inputs and a wider range of practical applications.
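Sliding Window Attention is easier to see in code. Below is a minimal single-head sketch in pure Python, assuming a causal window in which each token sees itself and the previous `window - 1` tokens. The window size is an illustrative parameter only: the leak’s actual setting, and how such layers might be combined with other attention layers, are not covered here. For clarity the sketch builds the full mask; an efficient implementation would compute only the band of scores inside the window.

```python
import math


def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when query position i may attend to key position j.

    Causal sliding window: position i sees itself and the window - 1 tokens before it.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


def sliding_window_attention(q, k, v, window):
    """Single-head scaled dot-product attention restricted to a sliding window.

    q, k, v are lists of equal-length float vectors, one per token position.
    """
    mask = sliding_window_mask(len(q), window)
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for i, qi in enumerate(q):
        # Only keys inside the window are scored; everything older is ignored.
        visible = [j for j, allowed in enumerate(mask[i]) if allowed]
        scores = [scale * sum(a * b for a, b in zip(qi, k[j])) for j in visible]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        row = [0.0] * len(v[0])
        for w, j in zip(weights, visible):
            row = [r + (w / total) * x for r, x in zip(row, v[j])]
        out.append(row)
    return out


if __name__ == "__main__":
    # Visualise which positions each token can attend to ("x") with a window of 3.
    for row in sliding_window_mask(6, 3):
        print("".join("x" if allowed else "." for allowed in row))
```

Running the demo prints a diagonal band: the first row is `x.....` and the last is `...xxx`, so no token ever looks back more than two positions. Per-token attention cost therefore grows with the window size rather than with the full sequence length.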

But here’s the real story: why now?

OpenAI has faced mounting criticism for moving away from its open-source roots. Early supporters felt alienated as the company pivoted toward closed models like GPT-4 and enterprise partnerships. Releasing a model like this could be a strategic reset—a way to rekindle trust, ignite developer enthusiasm, and win back lost goodwill.

It’s also a sharp competitive move. Meta and Mistral have proven that open models can spark community-driven innovation. By launching a massive model into the wild, OpenAI isn’t just responding—it’s rewriting the playbook.

So, while the company hasn’t confirmed anything publicly, this leak carries weight: real repositories, real configs, real excitement. If true, we’re looking at one of the most significant open-source AI releases to date.

All eyes now turn to OpenAI. The countdown has unofficially begun.

Source Link: AI News