A new open-source project is pushing AI assistants toward the lower limits of what hardware can support.

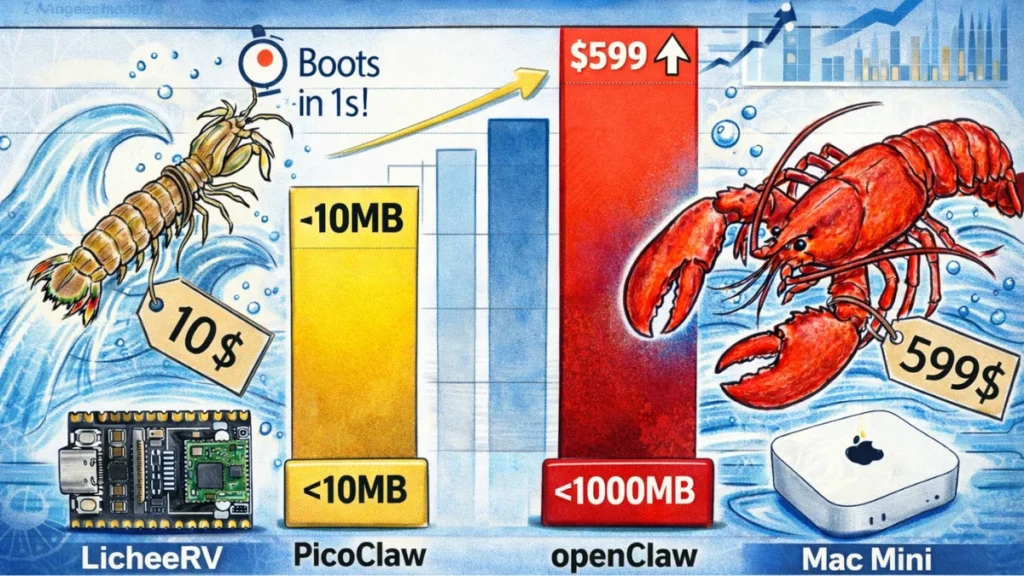

Called PicoClaw, the lightweight tool runs as a functional AI agent on devices with under 10MB of memory — hardware typically considered too small for modern AI workloads.

The project highlights a growing shift toward minimal, efficient AI agents designed for constrained environments rather than cloud-heavy systems.

What Just Happened

GitHub user Sipeed has released PicoClaw, an ultra-lightweight AI assistant written in Go, as an open-source project.

According to its repository, PicoClaw:

- Runs on devices with <10MB RAM

- Boots in approximately 1 second

- Works on ARM, RISC-V, and x86 architectures

- Can run on hardware costing roughly $10

PicoClaw operates as a command-line AI agent and supports multiple large language model backends through API connections, including OpenAI-compatible endpoints and third-party LLM gateways.
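To make "OpenAI-compatible endpoint" concrete, here is a minimal Go sketch of how a small client might build a chat-completions request. It is not taken from the PicoClaw codebase: the helper name `buildChatRequest`, the base URL, the key, and the model name are all placeholders chosen for illustration. The request shape (a POST to `/chat/completions` with a `model` and a `messages` array, plus a bearer token) follows the widely used OpenAI-style convention.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"strings"
)

// chatMessage and chatRequest mirror the common OpenAI-style
// chat-completions request body.
type chatMessage struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

type chatRequest struct {
	Model    string        `json:"model"`
	Messages []chatMessage `json:"messages"`
}

// buildChatRequest is a hypothetical helper (not PicoClaw's API).
// It prepares, but does not send, a single-turn chat request.
func buildChatRequest(baseURL, apiKey, model, prompt string) (*http.Request, error) {
	body, err := json.Marshal(chatRequest{
		Model:    model,
		Messages: []chatMessage{{Role: "user", Content: prompt}},
	})
	if err != nil {
		return nil, err
	}
	// Tolerate a trailing slash on the configured base URL.
	url := strings.TrimRight(baseURL, "/") + "/chat/completions"
	req, err := http.NewRequest(http.MethodPost, url, bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	req.Header.Set("Authorization", "Bearer "+apiKey)
	return req, nil
}

func main() {
	// Placeholder values; a real deployment would read these from config.
	req, err := buildChatRequest("https://llm.example.invalid/v1", "sk-placeholder",
		"example-model", "Summarize today's sensor log.")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(req.Method, req.URL.String())
}
```

Because only the base URL and key change between providers, the same few dozen lines can target any gateway that speaks this request format, which is one reason a client like this can stay small.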

The maintainers note that parts of the codebase were generated or optimized using AI models during development.

Why This Matters

Most AI assistants today assume powerful CPUs, abundant memory, and persistent cloud connectivity.

PicoClaw targets a different class of deployment: embedded systems, edge devices, industrial hardware, and low-power boards where resources are limited and reliability matters more than raw capability.

By reducing the runtime footprint to single-digit megabytes, the project lowers the barrier for integrating AI-driven workflows into environments traditionally excluded from modern AI stacks.

Expert Analysis

PicoClaw is not attempting to perform local inference or compete with full-featured AI assistants. Instead, it adopts a thin-agent architecture: a minimal local runtime paired with remote intelligence via APIs.

This design choice reflects a broader trend in AI tooling. Rather than embedding models directly on-device, developers are separating orchestration, control, and interaction from model execution.

The result is an agent that behaves more like a systems utility than a chatbot — small, fast, composable, and easy to deploy across diverse hardware.
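The split between local orchestration and remote model execution can be sketched in a few lines of Go. This is an illustrative assumption about how a thin agent might be structured, not PicoClaw's actual design: the `Backend` interface, the `Agent` type, the `tool:<name> <arg>` reply convention, and the `fakeBackend` stand-in are all hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// Backend abstracts model execution. In a real deployment this would
// wrap an HTTP call to a remote LLM API; nothing runs on-device.
type Backend interface {
	Complete(prompt string) (string, error)
}

// Tool is a local action the agent can run on the device itself.
type Tool func(arg string) string

// Agent holds only orchestration state: a backend and a tool table.
type Agent struct {
	backend Backend
	tools   map[string]Tool
}

// Step forwards the input to the backend. If the reply has the
// (hypothetical) form "tool:<name> <arg>", the named local tool is
// run and its output returned; otherwise the reply is returned as-is.
func (a *Agent) Step(input string) (string, error) {
	reply, err := a.backend.Complete(input)
	if err != nil {
		return "", err
	}
	if !strings.HasPrefix(reply, "tool:") {
		return reply, nil
	}
	name, arg, _ := strings.Cut(strings.TrimPrefix(reply, "tool:"), " ")
	tool, found := a.tools[name]
	if !found {
		return "", fmt.Errorf("unknown tool %q", name)
	}
	return tool(arg), nil
}

// fakeBackend stands in for a remote model so the sketch runs offline.
type fakeBackend struct{ reply string }

func (f fakeBackend) Complete(string) (string, error) { return f.reply, nil }

func main() {
	agent := &Agent{
		backend: fakeBackend{reply: "tool:echo hello"},
		tools: map[string]Tool{
			"echo": func(arg string) string { return "echo: " + arg },
		},
	}
	out, err := agent.Step("say hello")
	fmt.Println(out, err)
}
```

The local side here is a map lookup and some string handling, so its memory cost is dominated by the Go runtime rather than by anything model-related. Swapping `fakeBackend` for a type that calls a remote API would leave `Agent` unchanged.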

Comparison

Compared with popular AI agent frameworks that rely on Python, large dependencies, and long startup times, PicoClaw emphasizes:

- A single compiled Go binary

- Minimal memory overhead

- Fast cold starts

- Broad hardware compatibility

It fits naturally into environments where reliability, simplicity, and footprint are more important than advanced user interfaces.

What Happens Next

PicoClaw is still early-stage, with limited contributors and modest visibility so far.

Its future will depend on whether developers adopt it for real-world use cases such as automation, monitoring, or offline-capable assistants — and whether the community expands its tooling and integrations.

If adoption grows, the project could become a reference point for how small an AI agent can realistically be.

Conclusion

PicoClaw doesn’t argue that AI should be bigger or more powerful.

It makes a quieter case: that AI can be small, fast, and everywhere — even on hardware most modern systems ignore.

As AI infrastructure continues to diversify, projects like PicoClaw show that efficiency, not scale, may define the next wave of practical AI tools.