An open-source, 380M-parameter model aims to standardize and reconstruct fragmented EEG signals, a step toward practical thought-to-text research.

Brain-computer interface (BCI) research has long been constrained by a problem that rarely gets attention outside labs: messy data. Electroencephalography, or EEG, is widely used across research hospitals, universities, and even consumer headsets. But recordings are often incomplete, noisy, or incompatible across devices, and entire sessions get discarded because a few electrodes fail.

San Francisco-based startup Zyphra is betting that this bottleneck, not hardware, is what's slowing progress.

On Tuesday, the company released ZUNA, a 380-million-parameter foundation model trained on EEG data. The model is designed to denoise, reconstruct, and upsample scalp recordings across different channel layouts. Zyphra says it trained the system on roughly 2 million channel-hours of publicly available EEG data and is releasing the weights and tooling under an Apache 2.0 license.

The company frames ZUNA as an early building block toward noninvasive thought-to-text systems. For now, its utility is more grounded: helping researchers recover and standardize brain-signal data that would otherwise be difficult to use.

Why EEG Has Lagged Behind Other Modalities

In AI, foundation models have reshaped text, vision, and speech processing. EEG has not followed that trajectory.

Unlike language datasets scraped from the web, EEG data is fragmented. Studies are small. Electrode configurations vary. Protocols differ by institution. Hardware ranges from dense lab setups to lightweight consumer headsets.

That fragmentation has made it difficult to pretrain large, general-purpose models on neural signals.

ZUNA is an attempt to apply the same pretraining logic used in language and vision models to EEG. Instead of building task-specific decoders for one experiment at a time, Zyphra is training a general representation model meant to work across datasets and electrode configurations.

If it works as described, that could reduce a recurring inefficiency in neuroscience and BCI development: repeatedly cleaning and interpolating signals from scratch for each study.

Replacing a Default That Has Not Changed Much

Most EEG practitioners rely on spherical spline interpolation to estimate missing channels. The method, included by default in the widely used MNE software package, smooths signals across the scalp using geometric assumptions: electrodes are treated as points on a sphere, and the potential field is assumed to vary smoothly between them.

It is computationally simple. It is also limited.

Spherical splines perform reasonably well when only a few electrodes fail. But as dropout increases, whether from motion artifacts, hardware faults, or low-density headsets, the quality of the interpolated signals deteriorates.
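To make the baseline concrete, here is a minimal NumPy sketch of spherical spline interpolation in the spirit of the classic Perrin et al. (1989) formulation: fit a smooth field to the working electrodes on a unit sphere, then evaluate it where electrodes are missing. This is not MNE's implementation; the function names, the stiffness `m=4`, and the 7-term Legendre truncation are illustrative assumptions.

```python
import numpy as np
from numpy.polynomial.legendre import legval


def spline_kernel(cosang, m=4, n_terms=7):
    """Spherical spline kernel g(cos theta), as a truncated Legendre series.

    g(x) = 1/(4*pi) * sum_{n=1}^{n_terms} (2n+1) / (n^m (n+1)^m) * P_n(x)
    """
    n = np.arange(1, n_terms + 1)
    coeffs = np.zeros(n_terms + 1)  # coeffs[0] (the P_0 term) stays zero
    coeffs[1:] = (2 * n + 1) / (n**m * (n + 1) ** m) / (4 * np.pi)
    return legval(cosang, coeffs)


def interpolate_channels(good_pos, good_vals, target_pos, m=4, n_terms=7):
    """Estimate signals at target_pos from electrodes at good_pos.

    good_pos: (k, 3) coordinates, good_vals: (k,) or (k, n_times),
    target_pos: (t, 3) coordinates of the missing electrodes.
    """
    # Project onto the unit sphere so dot products become cosines of angles.
    good = good_pos / np.linalg.norm(good_pos, axis=1, keepdims=True)
    target = target_pos / np.linalg.norm(target_pos, axis=1, keepdims=True)
    k = good.shape[0]

    # Solve [[G, 1], [1^T, 0]] @ [C; c0] = [V; 0] for spline weights C and offset c0.
    system = np.zeros((k + 1, k + 1))
    system[:k, :k] = spline_kernel(np.clip(good @ good.T, -1.0, 1.0), m, n_terms)
    system[:k, k] = 1.0
    system[k, :k] = 1.0
    rhs = np.concatenate([good_vals, np.zeros((1,) + good_vals.shape[1:])])
    solution = np.linalg.solve(system, rhs)
    weights, offset = solution[:k], solution[k]

    # Evaluate the fitted field at the missing electrode positions.
    g_target = spline_kernel(np.clip(target @ good.T, -1.0, 1.0), m, n_terms)
    return g_target @ weights + offset


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    positions = rng.normal(size=(16, 3))  # stand-in electrode coordinates
    signals = rng.normal(size=(16, 250))  # 16 channels x 250 samples
    missing = rng.normal(size=(2, 3))  # two dropped electrodes
    print(interpolate_channels(positions, signals, missing).shape)  # (2, 250)
```

The geometric assumption is visible in the code: the kernel depends only on the angle between electrodes, so when most channels are gone, the fit has little nearby data to lean on.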

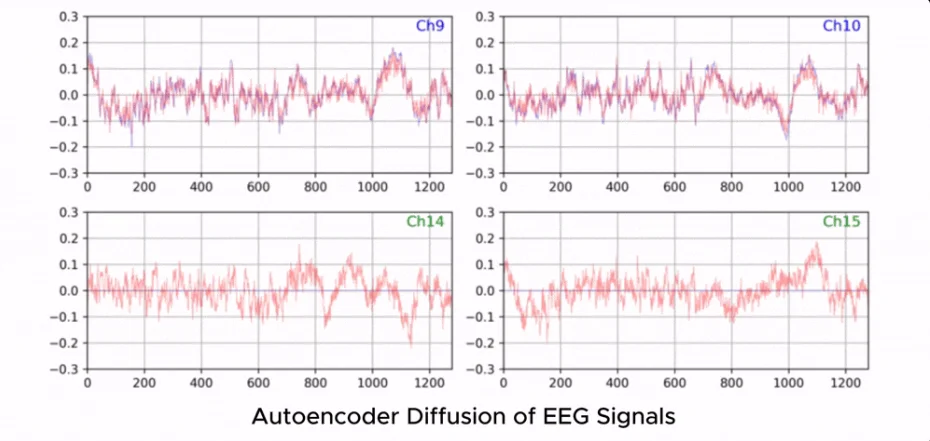

ZUNA replaces that interpolation step with a learned diffusion autoencoder, built on a transformer backbone, that reconstructs signals from partial inputs. According to Zyphra's internal validation tests, the model outperforms spherical spline interpolation across datasets, and the gap widens when more than 75 percent of channels are missing.
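Zyphra's description stops at "diffusion autoencoder," so the sketch below is a generic illustration, not ZUNA's training code. It assumes a standard DDPM-style forward process with a cosine noise schedule, in which a denoiser would learn to predict the added noise while conditioning on whichever channels were actually observed. The names `cosine_alpha_bar` and `noisy_training_pair` are hypothetical.

```python
import numpy as np


def cosine_alpha_bar(t, s=0.008):
    """Fraction of clean signal kept at diffusion time t in [0, 1] (cosine schedule)."""
    f_t = np.cos((t + s) / (1 + s) * np.pi / 2) ** 2
    f_0 = np.cos(s / (1 + s) * np.pi / 2) ** 2
    return f_t / f_0


def noisy_training_pair(clean, visible_mask, t, rng):
    """Build one training example for a conditional denoiser (hypothetical helper).

    clean:        (channels, time) ground-truth EEG window
    visible_mask: (channels,) bool, True where the channel was recorded
    Returns (noised signal x_t, conditioning input, noise the model should predict).
    """
    alpha_bar = cosine_alpha_bar(t)
    noise = rng.standard_normal(clean.shape)
    # Forward process: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise
    x_t = np.sqrt(alpha_bar) * clean + np.sqrt(1.0 - alpha_bar) * noise
    # Conditioning input: observed channels only, missing channels zeroed out.
    condition = np.where(visible_mask[:, None], clean, 0.0)
    return x_t, condition, noise
```

The design point is that the partial recording enters as a condition, not as the thing being noised, so at inference time the model can generate all channels from pure noise while staying anchored to the electrodes that worked.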

The company evaluated performance on held-out validation data and on separate test datasets with different distributions. Independent replication will ultimately determine how broadly those gains hold up, but the choice of baseline reflects real-world practice rather than a synthetic comparison.
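One way to picture this kind of benchmark is a dropout sweep: hide a growing fraction of channels in held-out recordings, ask a method to fill them in, and score the result. This is a minimal sketch under assumed choices; the normalized-MSE metric, the fractions, and the `mean_of_visible` stand-in baseline are illustrative, not Zyphra's protocol. In a real comparison, `reconstruct` would wrap spherical spline interpolation or ZUNA.

```python
import numpy as np


def nmse(pred, target):
    """Normalized mean squared error: error energy / signal energy."""
    return float(np.sum((pred - target) ** 2) / np.sum(target**2))


def dropout_sweep(signals, reconstruct, fractions, rng):
    """Score a reconstruction method as more channels go missing.

    signals:     (channels, time) held-out recording
    reconstruct: callable(masked_signals, missing_idx) -> (len(missing_idx), time)
    fractions:   dropout fractions to test, e.g. [0.25, 0.5, 0.75, 0.9]
    """
    n_ch = signals.shape[0]
    scores = {}
    for frac in fractions:
        # Drop at least one channel and always leave at least one visible.
        n_drop = min(n_ch - 1, max(1, int(round(frac * n_ch))))
        missing = rng.choice(n_ch, size=n_drop, replace=False)
        masked = signals.copy()
        masked[missing] = 0.0  # the method never sees the true values
        scores[frac] = nmse(reconstruct(masked, missing), signals[missing])
    return scores


def mean_of_visible(masked, missing):
    """Stand-in baseline: fill every missing channel with the mean visible channel."""
    visible = np.setdiff1d(np.arange(masked.shape[0]), missing)
    return np.tile(masked[visible].mean(axis=0), (len(missing), 1))
```

Calling `dropout_sweep(recording, mean_of_visible, [0.25, 0.5, 0.75, 0.9], np.random.default_rng(0))` returns one score per fraction. Running the same sweep for two methods on the same held-out data is what shows whether one method's advantage grows as dropout increases.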

What Technically Changed

At a high level, ZUNA encodes EEG signals into a shared latent space and reconstructs them from that representation. During training, the model uses masked reconstruction and heavy dropout so it learns to predict missing channels and denoise corrupted ones.
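The training recipe described above, hiding some channels and corrupting the rest, can be sketched as a data-corruption step plus a loss. This is an assumption-laden illustration rather than ZUNA's objective: the drop probability, the additive Gaussian noise, and the per-channel weighting in the hypothetical `reconstruction_loss` are all choices made for the example.

```python
import numpy as np


def corrupt(clean, drop_prob, noise_std, rng):
    """Hide random channels and add noise to the rest (hypothetical helper).

    clean: (channels, time). Returns (corrupted input, keep mask).
    """
    n_ch = clean.shape[0]
    keep = rng.random(n_ch) >= drop_prob
    if not keep.any():  # guarantee the model sees at least one channel
        keep[rng.integers(n_ch)] = True
    noisy = clean + noise_std * rng.standard_normal(clean.shape)
    corrupted = np.where(keep[:, None], noisy, 0.0)  # dropped channels -> zeros
    return corrupted, keep


def reconstruction_loss(pred, clean, keep, dropped_weight=1.0):
    """Per-channel MSE against the clean signal, averaged with channel weights.

    Kept channels measure denoising; dropped channels measure imputation.
    Setting dropped_weight > 1 emphasizes the imputation task.
    """
    per_channel = np.mean((pred - clean) ** 2, axis=1)
    weights = np.where(keep, 1.0, dropped_weight)
    return float(np.sum(weights * per_channel) / np.sum(weights))
```

Because the target is always the clean signal, a single objective teaches both skills the article mentions: filling in missing channels and cleaning up the ones that survived.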

Two architectural choices stand out.

First, EEG signals (continuous waveforms with varying channel counts) are compressed into short time chunks that are mapped into token-like representations. Those tokens are arranged into a one-dimensional sequence that a standard transformer can process.
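A minimal sketch of the chunk-and-flatten idea, assuming non-overlapping fixed-length chunks and channel-major ordering (neither detail is specified in Zyphra's description). Each row of `tokens` would then typically be projected by a learned linear layer into the transformer's embedding space; that projection is omitted here. `eeg_to_tokens` is a hypothetical name.

```python
import numpy as np


def eeg_to_tokens(signals, chunk_len):
    """Split (channels, time) EEG into per-channel time chunks, one token each.

    Returns:
      tokens:      (channels * n_chunks, chunk_len) flattened 1-D token sequence
      channel_idx: (channels * n_chunks,) which electrode each token came from
      chunk_idx:   (channels * n_chunks,) which time chunk each token came from
    Trailing samples that do not fill a whole chunk are dropped.
    """
    n_ch, n_t = signals.shape
    n_chunks = n_t // chunk_len
    trimmed = signals[:, : n_chunks * chunk_len]
    # (channels, n_chunks, chunk_len) -> (channels * n_chunks, chunk_len)
    tokens = trimmed.reshape(n_ch, n_chunks, chunk_len).reshape(-1, chunk_len)
    channel_idx = np.repeat(np.arange(n_ch), n_chunks)
    chunk_idx = np.tile(np.arange(n_chunks), n_ch)
    return tokens, channel_idx, chunk_idx
```

For example, a 19-channel recording of 1,280 samples split into 32-sample chunks yields 19 × 40 = 760 tokens. Because the channel count only changes the sequence length, the same transformer can accept recordings from different headsets.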

Second, the model encodes electrode positions using a four-dimensional rotary positional embedding scheme. Instead of assuming a fixed montage like the 10-20 system, ZUNA operates directly on electrode coordinates, which allows it to generalize across arbitrary layouts.
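The sketch below shows one common way to extend rotary embeddings to several coordinates: split each query or key vector into four slices and rotate each slice by angles proportional to one coordinate. Treating the four dimensions as the electrode's x, y, z position plus time, along with the frequency base and slice layout, is an assumption; the article only says the scheme is four-dimensional.

```python
import numpy as np


def rope_1d(x, pos, base=10000.0):
    """Rotary embedding along one coordinate.

    x: (n, d) with d even; pos: (n,) coordinate per row.
    Feature pairs (x[2i], x[2i+1]) are rotated by angle pos * base**(-2i/d).
    """
    d = x.shape[-1]
    freqs = base ** (-np.arange(0, d, 2) / d)  # (d/2,)
    angles = np.asarray(pos)[:, None] * freqs  # (n, d/2)
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = x_even * cos - x_odd * sin
    out[:, 1::2] = x_even * sin + x_odd * cos
    return out


def rope_4d(x, coords, base=10000.0):
    """Rotate four equal slices of x, one per coordinate (assumed x, y, z, t).

    x: (n, d) float queries or keys with d divisible by 8; coords: (n, 4).
    """
    d = x.shape[-1]
    if d % 8 != 0:
        raise ValueError("feature dim must be divisible by 8 (4 even-width slices)")
    w = d // 4
    parts = [rope_1d(x[:, i * w:(i + 1) * w], coords[:, i], base) for i in range(4)]
    return np.concatenate(parts, axis=-1)
```

The useful property is that the dot product between a rotated query and a rotated key depends only on the difference between their coordinates, not on a channel index, which is what lets a model handle montages it has never seen. In practice the coordinates would need rescaling, since electrode positions in meters produce very small angles at `base=10000`.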

At 380 million parameters, the model is relatively modest by current AI standards. Zyphra says it runs on consumer GPUs and can operate on CPUs for many workloads, lowering the barrier for labs without large compute budgets.
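A back-of-the-envelope check on the consumer-GPU claim: weight memory is simply parameter count times bytes per parameter. This counts weights only (no activations or framework overhead), and the numeric precision ZUNA ships in is an assumption here.

```python
def weight_memory_gb(n_params, bytes_per_param):
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


N_PARAMS = 380e6  # parameter count reported for ZUNA
for label, nbytes in [("fp32", 4), ("fp16/bf16", 2)]:
    print(f"{label}: {weight_memory_gb(N_PARAMS, nbytes):.2f} GB")
# fp32: 1.52 GB
# fp16/bf16: 0.76 GB
```

Either figure fits within the memory of common consumer GPUs, which is consistent with Zyphra's claim.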

Where It Fits Into Real Workflows

The practical implications are less about mind reading and more about salvage.

EEG datasets frequently include partially corrupted sessions. A few bad channels can render an otherwise valuable recording unusable. ZUNA’s ability to reconstruct or denoise signals could effectively increase usable sample sizes without additional data collection.

That has a downstream impact on statistical power, especially in small clinical or cognitive studies.
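To see why salvaged sessions matter, here is a normal-approximation power calculation for a two-group comparison. The effect size (Cohen's d = 0.5) and the jump from 20 to 26 usable recordings per group are hypothetical numbers chosen for illustration, and `approx_power` is a hypothetical helper rather than a library function.

```python
from statistics import NormalDist


def approx_power(effect_size, n_per_group, alpha=0.05):
    """Normal approximation to the power of a two-sided, two-sample test.

    effect_size: Cohen's d; n_per_group: usable recordings in each group.
    Ignores the negligible chance of rejecting in the wrong direction.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    noncentrality = effect_size * (n_per_group / 2) ** 0.5
    return z.cdf(noncentrality - z_crit)


for n in (20, 26):
    print(n, round(approx_power(0.5, n), 2))
```

Under these assumptions, power rises from roughly 0.35 to roughly 0.44, a meaningful gain for a study that collected no new data.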

The model also attempts to bridge the gap between low-channel-count consumer devices and higher-density lab systems by mapping sparse inputs into a higher-resolution signal space. Whether that translation preserves meaningful physiological detail will be a key question for researchers.

Importantly, Zyphra includes a research-use-only disclaimer. The model is not validated for diagnosis or clinical decision-making, and the company explicitly states it is not intended for medical use.

Open Source as Strategy

ZUNA is being released with model weights on Hugging Face, inference and preprocessing code on GitHub, and a pip-installable package. The Apache 2.0 license allows commercial use.

That choice suggests Zyphra is prioritizing ecosystem development over tight IP control, at least at this stage. Foundation models in other domains have benefited from community fine-tuning and benchmarking. EEG may require similar collective validation.

The company also says it plans to open source its data infrastructure, though details on timing remain unclear.

In a field where reproducibility and dataset access are persistent challenges, transparency could influence adoption as much as raw performance.

What I Will Be Watching Next

The immediate test will be independent validation. Researchers will want to see how ZUNA performs on niche datasets, rare electrode configurations, and edge-case artifacts not covered in Zyphra's training corpus.

I will also be watching whether BCI startups build the model into production pipelines or treat it as a research curiosity.

Thought-to-text remains an ambitious long-term goal. ZUNA does not deliver that today. What it does offer is something more pragmatic: a scalable foundation model for cleaning and reconstructing EEG data.

If that layer becomes reliable and widely adopted, it could quietly accelerate progress in a field that has struggled more with data quality than with ambition.