The AI boom is pushing data centers to their energy limits. An Israeli startup says it can change that—by rethinking how CPUs are built from the ground up.

When most people in the chip industry heard NeoLogic’s plan, they said it couldn’t be done. The founders, Avi Messica and Ziv Leshem, were told there was no room left for innovation in CPU logic design. But three years later, the Israeli startup is proving that conventional wisdom might just be wrong.

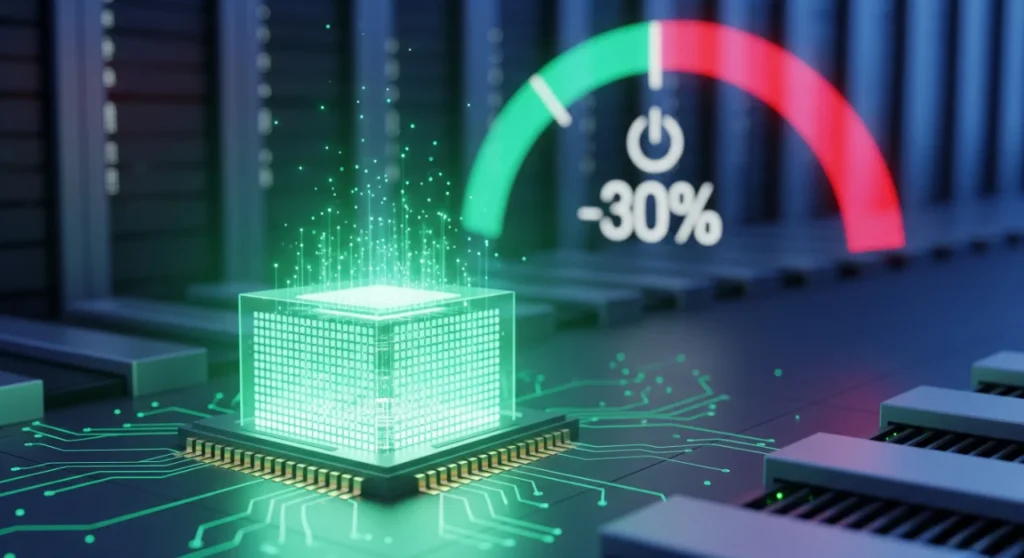

Founded in 2021, NeoLogic is building a new class of energy-efficient server CPUs for AI data centers—using fewer transistors and simplified logic to run faster while consuming less power. It’s a bold bet at a time when AI infrastructure is straining the world’s energy supply.

Messica, NeoLogic’s CEO, recalls how critics insisted, “You can’t innovate in circuit design—it’s too mature.” His response? “Moore’s Law might be slowing, but that doesn’t mean progress has to.”

Key Takeaways

- Energy savings up to 30%: Lower power consumption could dramatically reduce data center costs.

- Smaller, simpler logic: Fewer transistors mean less heat and faster performance.

- Backed by major funding: $10M Series A led by KOMPAS VC; hyperscaler partners not yet disclosed.

- Market-ready by 2027: Single-core test chip expected by late 2025, with data center deployment targeted for 2027.

Leshem, the CTO, spent decades designing chips at Intel and Synopsys. Messica worked on circuit design and manufacturing. Together, they’ve built NeoLogic around a simple but radical idea: stop chasing ever-smaller transistors, and instead rethink how they’re arranged and used.

“About a decade ago, the industry stopped pushing transistor sizes much smaller,” Messica explained. “But the way chips process information hasn’t changed much. We decided to break that mold.”

NeoLogic’s approach could be a lifeline for hyperscalers, the massive tech companies operating sprawling AI data centers. Power usage from these facilities is expected to double in the next four years, driving up costs, straining grids, and even increasing water use.

By cutting energy demand by as much as 30%, NeoLogic says its CPUs could also lower construction costs for new facilities and reduce capital expenditure. “It’s not just an engineering challenge—it’s about societal impact,” Messica said.

The company isn’t naming its hyperscaler partners just yet, but it plans to have test chips ready by late 2025 and to reach full-scale deployment in data centers by 2027.

For investors, the timing is perfect. As AI workloads surge, efficiency is becoming just as important as raw processing power. If NeoLogic delivers, it won’t just make CPUs—it could help redefine the economics of AI itself.