Alibaba’s open-weight release signals a shift from pure scale to agent-grade efficiency

AI models keep getting bigger. The harder question now is whether they’re getting economically deployable.

With the release of Qwen3.5-397B-A17B, Alibaba is making a case that scale alone is no longer the headline. The new open-weight model contains 397 billion total parameters but activates only about 17 billion of them for each token it processes.

That design choice matters more than the raw parameter count. It speaks directly to the cost, latency, and infrastructure realities enterprises face when turning experimental AI agents into production systems.

Why Efficiency Is the Real Story

Frontier AI over the past two years has largely been a race toward trillion-parameter territory. But dense models scale costs alongside capability. The practical bottleneck isn’t intelligence — it’s inference economics.

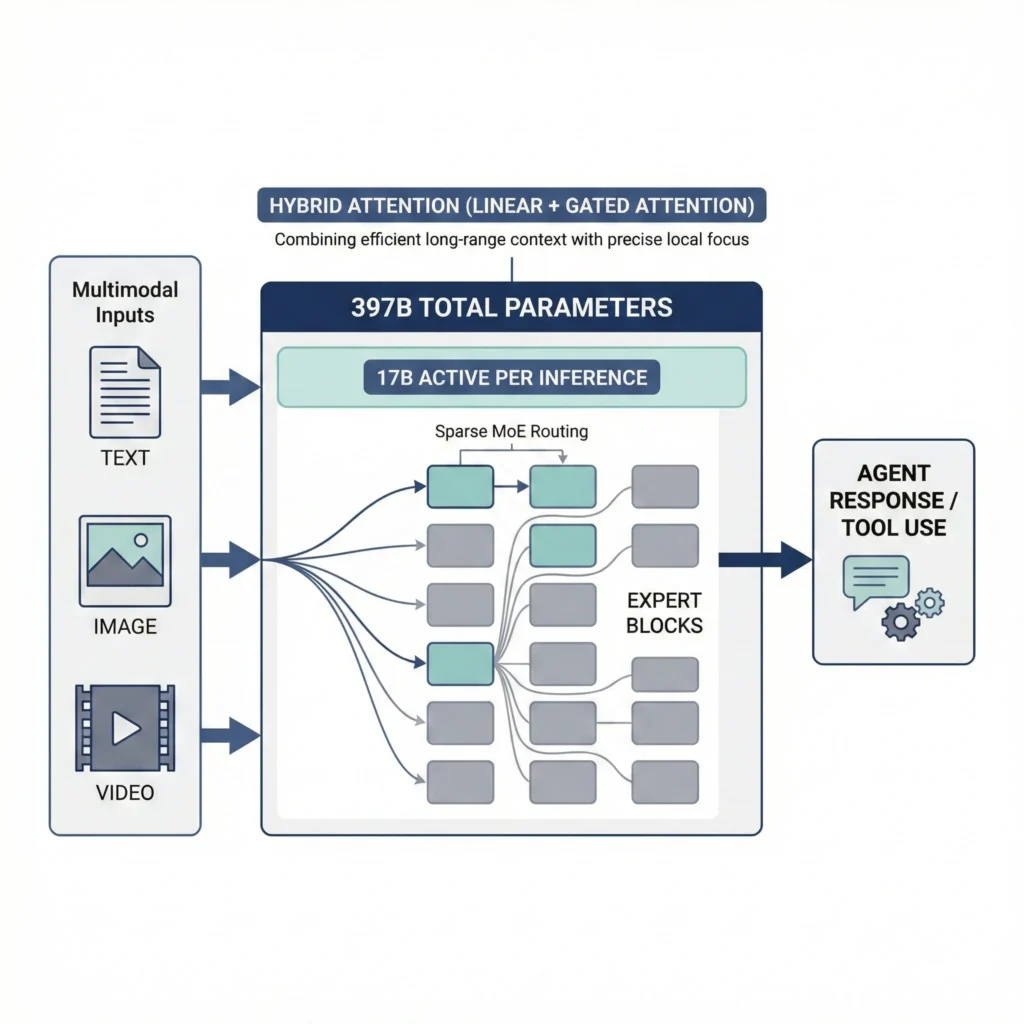

Qwen3.5 uses a sparse mixture-of-experts (MoE) architecture combined with hybrid attention mechanisms. Instead of engaging the entire network for every token, a learned router sends each token to a small set of expert blocks, so only a fraction of the model activates at runtime.

In Qwen3.5’s case, that fraction is 17B parameters per pass.
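To make the routing idea concrete, here is a minimal sketch of top-k expert routing for a single token, in plain Python. It illustrates the generic sparse MoE technique, not Qwen3.5's actual implementation: the toy sizes (8 experts, top-2, a 4-dimensional token), the linear router, and the helper names `top_k_route` and `moe_forward` are all hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights to sum to 1."""
    probs = softmax(logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(
    token: Vector, router: List[Vector], experts: List[Expert], k: int
) -> Tuple[Vector, List[int]]:
    """Send one token through a sparse MoE layer; return the output and the experts that ran."""
    # Router score per expert: dot product of the token with that expert's router row.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router]
    out = [0.0] * len(token)
    used: List[int] = []
    for idx, gate in top_k_route(logits, k):
        expert_out = experts[idx](token)  # only the selected experts execute
        out = [o + gate * e for o, e in zip(out, expert_out)]
        used.append(idx)
    return out, used


if __name__ == "__main__":
    random.seed(0)
    dim, num_experts, k = 4, 8, 2  # toy sizes, not Qwen3.5's real configuration
    router = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
    # Each toy expert just scales its input by a different constant.
    experts: List[Expert] = [lambda v, s=s: [s * x for x in v] for s in range(1, num_experts + 1)]
    token = [random.gauss(0, 1) for _ in range(dim)]
    out, used = moe_forward(token, router, experts, k)
    print(f"experts used: {used} ({len(used)} of {num_experts})")
```

Production MoE layers batch many tokens, use feed-forward networks as experts, and usually add load-balancing objectives so tokens spread across experts. The point of the sketch is narrower: per-token compute scales with k, not with the total number of experts.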

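With only 17B parameters active, its per-token compute sits closer to that of a mid-sized dense model, while it retains the representational capacity of a 397B system. Memory is a different matter: all 397B weights typically still have to be held in memory to serve the model. For enterprises running sustained agent workflows, such as document analysis, coding assistance, and multimodal automation, the compute difference directly affects cost predictability.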
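The gap between total and active parameters can be checked with back-of-the-envelope arithmetic. This sketch uses the common approximation of about 2 FLOPs per parameter per token for a forward pass (ignoring attention overhead) and assumes 2 bytes per weight for BF16 and 1 byte for FP8; the function names are illustrative, not from any Alibaba tooling.

```python
TOTAL_PARAMS = 397e9   # Qwen3.5-397B-A17B total parameters
ACTIVE_PARAMS = 17e9   # parameters active per token


def active_fraction(active: float, total: float) -> float:
    return active / total


def forward_flops_per_token(params: float) -> float:
    # Rule of thumb: ~2 FLOPs (one multiply, one add) per parameter per token.
    return 2 * params


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    # Every expert must be resident to serve the model, so this uses TOTAL params.
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    print(f"active fraction: {active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS):.1%}")
    sparse = forward_flops_per_token(ACTIVE_PARAMS)
    dense = forward_flops_per_token(TOTAL_PARAMS)
    print(f"compute per token: {sparse / 1e9:.0f} vs {dense / 1e9:.0f} GFLOPs "
          f"(~{dense / sparse:.0f}x fewer)")
    for name, nbytes in [("BF16", 2), ("FP8", 1)]:
        print(f"weights in {name}: ~{weight_memory_gb(TOTAL_PARAMS, nbytes):.0f} GB")
```

Run as written, this reports an active fraction of about 4.3%, roughly 34 versus 794 GFLOPs per token, and weight footprints of about 794 GB in BF16 or 397 GB in FP8. That is the trade-off in one place: compute tracks the 17B, while memory still tracks the 397B.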

Alibaba reports decoding throughput gains of 8.6x to 19x over its earlier Qwen3-Max model, depending on context length. While such figures depend heavily on the hardware and serving stack, the architectural direction is clear: selective scale instead of brute-force scale.

Built for Native Multimodal Agents

Qwen3.5 is positioned as a native multimodal model, meaning text and visual inputs are fused early in training rather than connected through separate pipelines.

That architectural shift aligns with where enterprise AI demand is heading. Agent systems increasingly need to:

- Interpret UI screenshots

- Parse structured documents

- Analyze video

- Generate code

- Coordinate tool calls

The hosted Qwen3.5-Plus version supports a one million token context window. That size is less about bragging rights and more about workflow continuity. Large context reduces the need for aggressive truncation or external retrieval stitching when processing long sessions, complex codebases, or multi-document research tasks.
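As a rough illustration of that workflow point, the sketch below checks which documents fit in a 1M-token window before anything has to be truncated or handed to retrieval. The 4-characters-per-token ratio is a crude heuristic and an assumption (real counts depend on the tokenizer and the language), and `pack_documents`, its output reservation, and the sample sizes are hypothetical, not part of any Alibaba API.

```python
import math
from typing import List, Sequence, Tuple

CONTEXT_WINDOW = 1_000_000  # hosted Qwen3.5-Plus window, per Alibaba
CHARS_PER_TOKEN = 4         # crude heuristic; an assumption, not the real tokenizer


def estimate_tokens(text: str) -> int:
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def pack_documents(
    docs: Sequence[Tuple[str, str]], window: int, reserved_for_output: int
) -> Tuple[List[str], List[str], int]:
    """Greedily keep documents, in order, while they fit in the input budget.

    Returns (included names, overflow names, estimated input tokens used).
    Overflow documents are the ones that would need truncation or retrieval.
    """
    budget = window - reserved_for_output
    included: List[str] = []
    overflow: List[str] = []
    used = 0
    for name, text in docs:
        cost = estimate_tokens(text)
        if used + cost <= budget:
            included.append(name)
            used += cost
        else:
            overflow.append(name)
    return included, overflow, used


if __name__ == "__main__":
    docs = [
        ("contract.txt", "x" * 2_000_000),   # ~500k tokens
        ("codebase.txt", "y" * 1_600_000),   # ~400k tokens
        ("notes.txt", "z" * 800_000),        # ~200k tokens
    ]
    kept, spilled, used = pack_documents(docs, CONTEXT_WINDOW, reserved_for_output=32_000)
    print(f"kept={kept} spilled={spilled} tokens~{used:,}")
```

With these made-up sizes, the contract and codebase fit (about 900,000 estimated tokens) and only the notes would need chunking or retrieval; with a much smaller window, most of the session would have to be stitched together externally.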

Multilingual support has also expanded from 119 to 201 languages and dialects. For globally distributed teams — particularly those operating outside English-dominant markets — that broadens deployment viability.

Competitive Positioning Without Benchmark Theater

Alibaba released extensive benchmark data spanning reasoning, coding, multilingual evaluation, and multimodal tasks. On those vendor-reported results, Qwen3.5 performs competitively against leading frontier systems from OpenAI, Anthropic, and Google across several categories.

In coding benchmarks such as SWE-bench variants, it approaches but does not consistently surpass top proprietary models. In multilingual and multimodal evaluations — particularly spatial reasoning and math vision tasks — its relative positioning appears stronger.

Alibaba attributes much of the performance gain over its prior Qwen3 series to expanded reinforcement learning environments rather than narrow benchmark tuning. That distinction is meaningful. Training for generalized agent behavior differs from optimizing for leaderboard metrics.

Still, enterprises evaluating foundation models rarely make decisions based solely on benchmark deltas. Stability under tool use, consistency of structured outputs, and integration friction often outweigh marginal score differences.

Infrastructure Signals a Platform Strategy

Beyond model metrics, Alibaba disclosed system-level investments that suggest longer-term ambitions.

Training relied on heterogeneous parallelism strategies separating vision and language components, along with FP8 pipelines to reduce activation memory and improve throughput. The company also described an asynchronous reinforcement learning framework designed to better support multi-turn agent workflows.
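A worked calculation shows why lower-precision activations matter at long context. One stored activation tensor of shape batch × sequence × hidden takes that many elements times the bytes per element, so moving from BF16 (2 bytes) to FP8 (1 byte) halves it. The dimensions below are hypothetical, not Qwen3.5's; real FP8 pipelines also keep some tensors in higher precision and store scaling factors, which this sketch ignores.

```python
BYTES_PER_ELEMENT = {"fp32": 4, "bf16": 2, "fp8": 1}


def activation_bytes(batch: int, seq_len: int, hidden: int, dtype: str) -> int:
    """Memory for one stored activation tensor of shape (batch, seq_len, hidden)."""
    return batch * seq_len * hidden * BYTES_PER_ELEMENT[dtype]


if __name__ == "__main__":
    batch, seq_len, hidden = 1, 32_768, 4_096  # hypothetical sizes for illustration
    for dtype in ("bf16", "fp8"):
        mib = activation_bytes(batch, seq_len, hidden, dtype) / 2**20
        print(f"{dtype}: {mib:.0f} MiB per stored activation tensor")
```

This prints 256 MiB for BF16 and 128 MiB for FP8. The saving multiplies across every layer and every tensor kept for the backward pass, which is where the throughput benefit of FP8 training comes from.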

That level of infrastructure disclosure indicates this is not a one-off model release. It reflects a broader platform build-out aimed at scalable agent deployment.

In effect, Alibaba is positioning Qwen3.5 less as a chatbot and more as a base layer for persistent digital agents.

Enterprise and Geopolitical Realities

Qwen3.5 is available as open weights, which lowers barriers for self-hosted experimentation. A hosted version runs through Alibaba Cloud’s Model Studio with tool-use and reasoning toggles.

For U.S.-based enterprises, adoption decisions may hinge on compliance and regulatory review, particularly for hosted deployment. Open weights mitigate some of that friction, but infrastructure and data governance considerations remain central.

That dynamic could shape where Qwen3.5 gains early traction — potentially stronger among research institutions, startups, and globally distributed engineering teams comfortable managing their own deployment stack.

The Bigger Strategic Shift

The more important signal may not be the 397B number. It’s the architectural direction.

Sparse activation, multimodal fusion, long-context handling, and reinforcement learning environments all point toward systems designed for sustained task execution rather than isolated prompt-response cycles.

The industry conversation is moving from “How smart is the model?” to “Can it operate reliably across hours, tools, and modalities?”

Qwen3.5 doesn’t claim to redefine that frontier outright. But it clearly targets it.

What I’ll be watching next is whether developer ecosystems begin integrating Qwen3.5 into agent frameworks at scale — and whether its selective-activation design proves economically compelling in real production environments, not just benchmark tables.

If that holds, the model’s true differentiator won’t be its 397B parameter count. It will be the 17B that actually turn on.